New research aims to revolutionize video accessibility for blind or low-vision (BLV) viewers with an AI-powered system that gives users the ability to explore content interactively. The innovative system, detailed in a recent paper, addresses significant gaps in conventional audio descriptions (AD), offering an enriched and immersive video viewing experience.

“Although videos have become an important medium to access information and entertain, BLV people often find them less accessible,” said lead author Zheng Ning, a PhD in Computer Science and Engineering at the University of Notre Dame. “With AI, we can build an interactive system to extract layered information from videos and enable users to take an active role in consuming video content through their limited vision, auditory perception, and tactility.”

ADs provide spoken narration of visual elements in videos and are crucial for accessibility. However, conventional static descriptions often omit details and focus primarily on providing information that helps users understand the content, rather than experience it. Plus, simultaneously consuming and processing the original sound and the audio from ADs can be mentally taxing, reducing user engagement.

Researchers from the University of Notre Dame, University of California San Diego, University of Texas at Dallas, and University of Wisconsin-Madison developed a new AI-powered system to address these challenges.

Called the System for Providing Interactive Content for Accessibility (SPICA), the tool enables users to interactively explore video content through layered ADs and spatial sound effects.

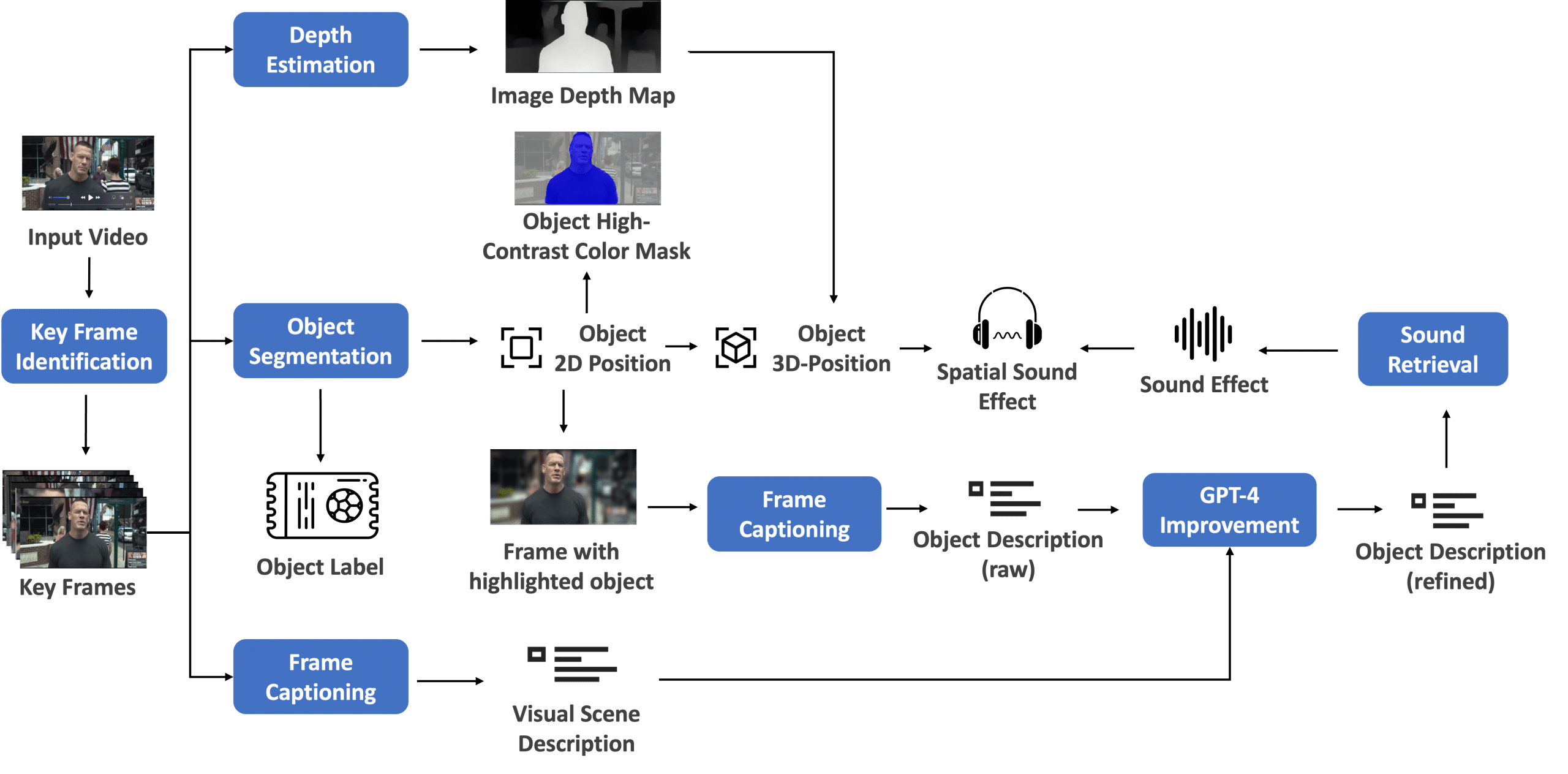

The machine learning pipeline begins with scene analysis to identify keyframes, followed by object detection and segmentation to pinpoint significant objects within each frame. These objects are then described in detail using a refined image captioning model and GPT-4 for consistency and comprehensiveness.
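The post does not say how SPICA's scene analysis picks keyframes. As an illustration only, the sketch below uses one common, simple technique in place of whatever SPICA actually does: it starts a new keyframe whenever a frame's grayscale histogram differs enough from the last keyframe's. Frames are assumed to be grayscale pixel grids, and the helper names (`gray_histogram`, `select_keyframes`) and the `threshold` value are hypothetical.

```python
from typing import List, Sequence

# Assumption: a frame is a grayscale image given as rows of 0-255 pixel values.
Frame = Sequence[Sequence[int]]


def gray_histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Return the normalized grayscale histogram of a frame."""
    counts = [0] * bins
    total = 0
    for row in frame:
        for px in row:
            counts[min(px * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def histogram_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Half the L1 distance: 0.0 for identical histograms, 1.0 for disjoint ones."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))


def select_keyframes(frames: Sequence[Frame], threshold: float = 0.5) -> List[int]:
    """Hypothetical helper: indices of frames whose histogram departs from the last keyframe."""
    if not frames:
        return []
    keyframes = [0]
    last_hist = gray_histogram(frames[0])
    for i in range(1, len(frames)):
        hist = gray_histogram(frames[i])
        if histogram_distance(hist, last_hist) > threshold:
            keyframes.append(i)
            last_hist = hist
    return keyframes


if __name__ == "__main__":
    dark = [[10] * 4 for _ in range(4)]
    bright = [[240] * 4 for _ in range(4)]
    print(select_keyframes([dark, dark, bright, bright, dark]))  # prints [0, 2, 4]
```

Comparing each frame against the last *keyframe*, rather than the previous frame, keeps slow fades from being missed; the detected keyframes would then feed the detection, segmentation, and captioning stages.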

Video 1. A demo of SPICA with interactivity for BLV users to explore the video by scrolling over objects

The pipeline also retrieves spatial sound effects for each object, using their 3D positions to enhance spatial awareness. Depth estimation further refines the 3D positioning of objects, and the frontend interface enables users to explore these frames and objects interactively using touch or keyboard input, with high-contrast overlays assisting those with residual vision.
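The post does not describe how SPICA renders an object's sound at its 3D position. The sketch below substitutes a deliberately simple technique, constant-power stereo panning plus inverse-distance attenuation, to show how a horizontal position and an estimated depth could become left/right gains. Full 3D audio (for example, HRTF-based rendering) is more involved. The function name `stereo_gains` and the `ref_depth_m` parameter are hypothetical.

```python
import math
from typing import Tuple


def stereo_gains(x_norm: float, depth_m: float, ref_depth_m: float = 1.0) -> Tuple[float, float]:
    """Hypothetical helper: left/right gains for an object's sound effect.

    x_norm:  horizontal center of the object, 0.0 = left edge of frame, 1.0 = right edge.
    depth_m: estimated distance to the object, e.g. from a depth-estimation model.
    """
    x = min(max(x_norm, 0.0), 1.0)
    # Constant-power pan: theta = 0 is hard left, theta = pi/2 is hard right,
    # and left**2 + right**2 == 1 at every position, so loudness stays even.
    theta = x * math.pi / 2
    left, right = math.cos(theta), math.sin(theta)
    # Inverse-distance attenuation; objects nearer than ref_depth_m are not boosted.
    attenuation = ref_depth_m / max(depth_m, ref_depth_m)
    return left * attenuation, right * attenuation


if __name__ == "__main__":
    print(stereo_gains(0.0, 2.0))  # prints (0.5, 0.0): far-left object, half volume
```

Constant-power panning is chosen here because a linear pan makes sounds noticeably quieter at the center of the frame.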

Figure 1. The machine learning pipeline consists of several modules for generating layered frame-level descriptions, object-level descriptions, high-contrast color masks, and spatial sound effects

SPICA runs on an NVIDIA RTX A6000 GPU, which the team was awarded as a recipient of the NVIDIA Academic Hardware Grant Program.

“NVIDIA technology is a crucial component behind the system, offering a stable and efficient platform for running these computational models, significantly reducing the time and effort to implement the system,” said Ning.

This advanced integration of computer vision and natural language processing techniques enables BLV users to engage with video content in a more detailed, flexible, and immersive way. Rather than being given predefined ADs per frame, users actively explore individual objects within the frame through a touch interface or a screen reader.
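One way object-level exploration by touch could work is hit-testing the touch point against the per-object segmentation masks. The sketch below is an assumption-laden illustration, not SPICA's frontend: it returns the description of the object under the touch point and falls back to the frame-level description on background. The `FrameObject` type, the `describe_at` function, and the "smallest overlapping mask wins" rule are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class FrameObject:
    """Hypothetical per-object record: a label, its description, and a boolean mask."""
    label: str
    description: str
    mask: Sequence[Sequence[bool]]  # mask[y][x] is True where the object is

    @property
    def area(self) -> int:
        return sum(sum(1 for v in row if v) for row in self.mask)


def describe_at(objects: List[FrameObject], x: int, y: int, frame_description: str) -> str:
    """Return what a screen reader could announce for a touch at pixel (x, y)."""
    hits = [
        o for o in objects
        if 0 <= y < len(o.mask) and 0 <= x < len(o.mask[y]) and o.mask[y][x]
    ]
    if not hits:
        # Touching background falls back to the frame-level description.
        return frame_description
    # Assumed heuristic: when masks overlap, report the smallest object,
    # which is often the one in front.
    target = min(hits, key=lambda o: o.area)
    return f"{target.label}: {target.description}"


if __name__ == "__main__":
    dog = FrameObject("dog", "A brown dog sitting on grass.",
                      [[x < 2 for x in range(4)] for _ in range(4)])
    ball = FrameObject("ball", "A small red ball.",
                       [[(x, y) == (1, 1) for x in range(4)] for y in range(4)])
    print(describe_at([dog, ball], 1, 1, "A park on a sunny day."))  # prints "ball: A small red ball."
```

The same masks could double as the high-contrast color overlays for users with residual vision, since both need per-pixel object boundaries.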

SPICA also augments existing ADs with interactive elements, spatial sound effects, and detailed object descriptions, all generated through an audio-visual machine-learning pipeline.

Video 2. SPICA is an AI-powered system that enables BLV users to interactively explore video content

During the development of SPICA, the researchers used BLV video consumption studies to align the system with user needs and preferences. The team conducted a user study with 14 BLV participants to evaluate usability and usefulness. The participants found the system easy to use and effective in providing additional information that improved their understanding of, and immersion in, video content.

According to the researchers, the insights gained from the user study highlight the potential for further research, including improving AI models to generate accurate and contextually rich descriptions. There is also potential in exploring haptic feedback and other sensory channels to augment video consumption for BLV users.

The team plans to pursue future research using AI to help BLV individuals with physical tasks in their daily lives, seeing potential in recent breakthroughs in large generative models.

Learn more about SPICA.

Read the research paper.

The AI for Good blog series showcases AI's transformative power in solving pressing global challenges. Learn how researchers and developers leverage groundbreaking technology and launch innovative projects using AI to create positive change for people and the planet.

This content was partially crafted with the assistance of generative AI and LLMs. It underwent careful review by the researchers and was edited by the NVIDIA Technical Blog team to ensure precision, accuracy, and quality. Quotes are original.