Delivered as optimized containers, NVIDIA NIM microservices are designed to accelerate AI application development for businesses of all sizes, paving the way for rapid production and deployment of AI technologies. The set of microservices can be used to build and deploy AI solutions across speech AI, data retrieval, digital biology, digital humans, simulation, and large language models (LLMs).

Each month, NVIDIA works to deliver NIM microservices for leading AI models across industries and domains. This post offers a look at the latest additions.

Speech and translation NIM microservices

The latest NIM microservices for speech and translation enable organizations to integrate advanced multilingual speech and translation capabilities into their global conversational applications. These include automatic speech recognition (ASR), text-to-speech (TTS), and neural machine translation (NMT), catering to diverse industry needs.

Parakeet ASR

The Parakeet ASR-CTC-1.1B-EnUS ASR model, with 1.1 billion parameters, provides record-setting English language transcription capabilities. It delivers exceptional accuracy and robustness, adeptly handling diverse speech patterns and noise levels. It enables businesses to advance their voice-based services, ensuring superior user experiences.

FastPitch-HiFiGAN TTS

A TTS NIM, FastPitch-HiFiGAN-EN integrates FastPitch and HiFiGAN models to generate high-fidelity audio from text. It enables businesses to create natural-sounding voices, elevating user engagement and delivering immersive experiences, setting a new standard in audio quality.

Megatron NMT

A powerful NMT model, Megatron 1B-En32 excels in real-time translation across multiple languages, facilitating seamless multilingual communication. It enables organizations to extend their global reach, engage diverse audiences, and foster efficient international collaboration.

By leveraging these advanced speech and translation NIM microservices, enterprises can revolutionize their conversational AI applications. From creating multilingual intelligent personal assistants and brand ambassadors to developing global customer service platforms, businesses can innovate and enhance user experiences across diverse languages and contexts.

Retrieval NIM microservices

The latest NVIDIA NeMo Retriever NIM microservices help developers efficiently fetch the best proprietary data to generate knowledgeable responses for their AI applications. NeMo Retriever enables organizations to seamlessly connect custom models to diverse business data and deliver highly accurate responses for AI applications using retrieval-augmented generation (RAG).

Embedding QA E5

The NVIDIA NeMo Retriever QA E5 embedding model is optimized for text question-answering retrieval. An embedding model is a crucial component of a text retrieval system, as it transforms textual information into dense vector representations. Such models are typically transformer encoders that process tokens of input text (for example, a question or a passage) to output an embedding.
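To make the role of an embedding model in retrieval concrete, here is a minimal sketch in Python. It is not the QA E5 model or any NIM API: `toy_embed` is a hypothetical stand-in that builds normalized token-count vectors, used only to show how a query and passages are mapped into the same vector space and compared by cosine similarity.

```python
import math

def build_vocab(texts):
    """Map each distinct lowercase token to a vector index."""
    vocab = {}
    for text in texts:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab

def toy_embed(text, vocab):
    """Hypothetical stand-in for an embedding model: L2-normalized token counts."""
    vec = [0.0] * len(vocab)
    for token in text.lower().split():
        if token in vocab:
            vec[vocab[token]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

def cosine(a, b):
    """For unit-length vectors, the dot product is the cosine similarity."""
    return sum(x * y for x, y in zip(a, b))

def retrieve(query, passages, top_k=2):
    """Embed the query and every passage, then return the top_k (score, passage) pairs."""
    vocab = build_vocab(passages + [query])
    q = toy_embed(query, vocab)
    scored = [(cosine(q, toy_embed(p, vocab)), p) for p in passages]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:top_k]

passages = [
    "embedding models map text to dense vectors",
    "molecular docking predicts ligand poses",
    "text to speech models generate audio",
]
results = retrieve("which models map text to vectors", passages, top_k=2)
for score, passage in results:
    print(f"{score:.3f}  {passage}")
```

A real embedding model replaces `toy_embed` with a learned encoder, so passages can match a query on meaning rather than on shared tokens alone. The similarity search step stays the same.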

Embedding QA Mistral 7B

The NVIDIA NeMo Retriever QA Mistral 7B embedding model is a popular multilingual community base model fine-tuned for text embedding for high-accuracy question-answering. This embedding model is most suitable for users who want to build a question-and-answer application over a large text corpus, leveraging the latest dense retrieval technologies.

Developers can achieve 2x improved throughput with the NeMo Retriever QA Mistral 7B NIM.

Snowflake Arctic Embed

Snowflake Arctic Embed is a suite of text embedding models for high-quality retrieval, optimized for performance. These models are ready for commercial use, free of charge. The Arctic Embed models have achieved state-of-the-art performance on the MTEB/BEIR leaderboard for each of their size variants.

Reranking QA Mistral 4B

The NVIDIA NeMo Retriever QA Mistral 4B reranking model is optimized for providing a logit score that represents how relevant a document is to a given query. The ranking model is a component of a text retrieval system that improves overall accuracy. A text retrieval system often uses an embedding model (dense) or lexical search (sparse) index to return relevant text passages given the input.

A ranking model can be used to rerank the potential candidates into a final order. A ranking model receives the question-passage pairs as input and can therefore process cross attention between the words. It would not be feasible to apply a ranking model to all documents in the knowledge base, so ranking models are often deployed in combination with embedding models.
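The two-stage pattern described above can be sketched as follows. This is an illustration under stated assumptions, not the NeMo Retriever implementation: `cheap_score` is a hypothetical stand-in for embedding similarity, and `pair_score` is a hypothetical stand-in for a reranker that reads the query and passage together. The point is that the costly pairwise scorer runs only on the short candidate list.

```python
def cheap_score(query, passage):
    """Stage 1 stand-in for embedding similarity: number of shared tokens."""
    return len(set(query.lower().split()) & set(passage.lower().split()))

def pair_score(query, passage):
    """Stage 2 stand-in for a reranker: scores the pair jointly by counting
    query bigrams that appear in the passage in the same order."""
    q = query.lower().split()
    p = passage.lower().split()
    passage_bigrams = set(zip(p, p[1:]))
    return sum(1 for bigram in zip(q, q[1:]) if bigram in passage_bigrams)

def retrieve_then_rerank(query, corpus, top_k=3):
    # Stage 1: score every document cheaply and keep only top_k candidates.
    candidates = sorted(
        corpus, key=lambda doc: cheap_score(query, doc), reverse=True
    )[:top_k]
    # Stage 2: apply the expensive pairwise scorer to the candidates only.
    return sorted(
        candidates, key=lambda doc: pair_score(query, doc), reverse=True
    )

corpus = [
    "trucks for delivery use optimization of route",
    "route optimization for delivery trucks reduces fuel cost",
    "speech recognition for call centers",
    "protein docking with graph neural networks",
]
query = "route optimization for delivery trucks"
ranked = retrieve_then_rerank(query, corpus, top_k=3)
for doc in ranked:
    print(doc)
```

In this toy corpus the first two passages tie in stage 1 because they share the same query tokens. The pairwise scorer breaks the tie by rewarding the passage that preserves the query's word order, which is the kind of signal a reranker adds on top of embedding retrieval.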

Developers can achieve 1.75x improved throughput with the NeMo Retriever QA Mistral 4B reranking NIM.

Digital biology NIM microservices

In the healthcare and life sciences sectors, NVIDIA NIM microservices are transforming digital biology. These advanced AI tools empower pharmaceutical companies, biotechnology firms, and healthcare facilities with capabilities to expedite innovation and the delivery of life-saving medicines to patients.

MolMIM

MolMIM is a transformer-based model for controlled small molecule generation. It can optimize and sample molecules from the latent space that have improved values of the desired scoring functions. This includes functions from other models and functions based on experimental data testing for various chemical and biological properties. Built on robust inference engines, the MolMIM NIM microservice can be deployed in the cloud or on-premises for enterprise-grade inference in computational drug discovery workflows, including virtual screening, lead optimization, and other lab-in-the-loop approaches.

DiffDock

The NVIDIA DiffDock NIM microservice is built for high-performance, scalable molecular docking at enterprise scale. It requires protein and molecule 3D structures as input but does not require any information about a binding pocket. Driven by a generative AI model and accelerated 3D equivariant graph neural networks, it can predict up to 7x more poses per second compared to the baseline published model, reducing the cost of computational drug discovery workflows, including virtual screening and lead optimization.

These digital biology NIM microservices enable pharmaceutical companies to streamline their drug development computational workflows, potentially delivering life-saving treatments faster at lower R&D cost.

LLM NIM microservices

LLMs continue to be a cornerstone of AI innovation. New NVIDIA NIM microservices for LLMs offer unprecedented performance and accuracy across various applications and languages.

Llama 3.1 8B and 70B

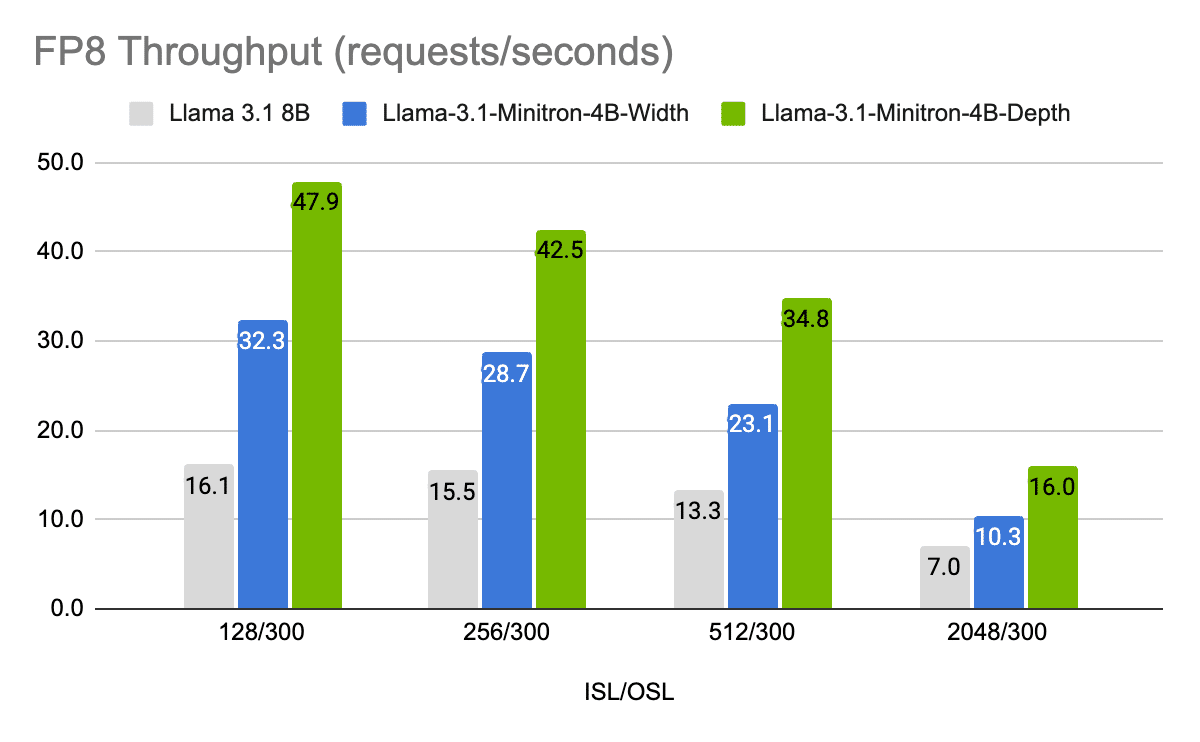

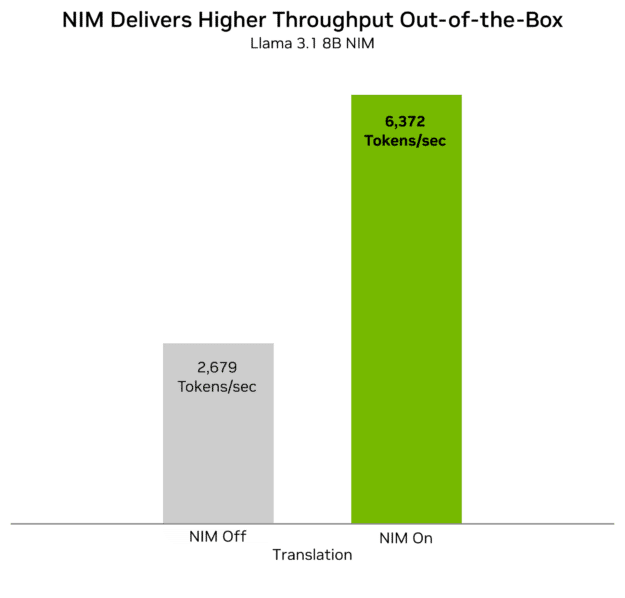

The Llama 3.1 8B and 70B models provide cutting-edge text generation and language understanding capabilities, serving as powerful tools for creating engaging and informative content. When deploying Llama 3.1 8B NIM on NVIDIA H100 data center GPUs, developers can achieve an out-of-the-box performance increase of up to 2.5x tokens per second for content generation compared to deploying the model without NIM.

Figure 1. Llama 3.1 8B NIM shows improved throughput for translation

Llama 3.1 8B Instruct, 1 x H100 SXM; input and output token lengths: 1,000. Concurrent client requests: 200. NIM on: BF16, TTFT: ~1s, ITL: ~30ms. NIM off: BF16, TTFT: ~4s, ITL: ~65ms
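As a rough cross-check of the figure settings, the following Python sketch estimates throughput from time to first token (TTFT) and inter-token latency (ITL). It is a back-of-the-envelope model, not NVIDIA's benchmarking methodology. It assumes each request takes about TTFT plus one ITL for every remaining output token, and that all 200 requests are served concurrently in steady state. The inputs are the approximate values quoted in the caption, so the result is only indicative.

```python
def request_latency_s(ttft_s, itl_s, output_tokens):
    """Approximate end-to-end latency: first token, then one ITL per later token."""
    return ttft_s + (output_tokens - 1) * itl_s

def aggregate_tokens_per_s(ttft_s, itl_s, output_tokens, concurrency):
    """Approximate aggregate output throughput, assuming all requests overlap fully."""
    latency = request_latency_s(ttft_s, itl_s, output_tokens)
    return concurrency * output_tokens / latency

OUTPUT_TOKENS = 1000  # output length from the figure settings
CONCURRENCY = 200     # concurrent client requests from the figure settings

# Approximate TTFT and ITL values from the caption, in seconds.
nim_on = aggregate_tokens_per_s(1.0, 0.030, OUTPUT_TOKENS, CONCURRENCY)
nim_off = aggregate_tokens_per_s(4.0, 0.065, OUTPUT_TOKENS, CONCURRENCY)
speedup = nim_on / nim_off

print(f"NIM on:  {nim_on:.0f} tokens/s (estimate)")
print(f"NIM off: {nim_off:.0f} tokens/s (estimate)")
print(f"Estimated speedup: {speedup:.2f}x")
```

Under these simplifying assumptions the estimate comes out a little above 2x, which fits the stated gain of up to 2.5x given that the caption's latency values are rounded.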

Llama 3.1 405B

Llama 3.1 405B is the largest openly available model that can be used for a wide variety of use cases. One key use case is synthetic data generation, helping businesses enhance model performance and expand their datasets. The Llama 3.1 405B NIM microservice can be downloaded and run anywhere today from the NVIDIA API catalog.

Simulation NIM microservices

New NVIDIA USD NIM microservices offer the ability to leverage generative AI copilots and agents to develop Universal Scene Description (OpenUSD) tools that accelerate the creation of 3D worlds.

The following microservices are now available to preview:

USD Code

USD Code is a state-of-the-art LLM that answers OpenUSD knowledge queries and generates USD-Python code.

USD Search

USD Search provides AI-powered search for OpenUSD data, 3D models, images, and assets using text- or image-based inputs.

USD Validate

USD Validate verifies the compatibility of OpenUSD assets using instant RTX rendering and rule-based validation.

With these new USD NIM microservices, more industries will be able to develop applications for visualizing industrial design and engineering projects, or to simulate environments to build the next wave of physical AI and robots.

Video conferencing NIM microservices

NVIDIA Maxine simplifies the deployment of AI features that enhance audio, video, and augmented reality effects for video conferencing and telepresence.

Maxine Audio2Face-2D

Maxine Audio2Face-2D, now available in the API catalog, animates a 2D image in real time, using speech audio only. Speech signals are translated into corresponding facial animation in the portrait photo to produce an H.264 compressed output video. It also enables head pose animation for natural delivery and can be coupled with chatbot output or translated speech. A common use case is virtual agents. You can begin prototyping with Audio2Face-2D through the API catalog today.

Eye contact

Eye contact plays a key role in establishing social connections, and in face-to-face conversations it signifies confidence, connection, and attention. To enhance the user experience, NVIDIA has developed the NVIDIA Maxine Eye Contact NIM microservice. This feature uses AI to apply a filter to the user's webcam feed in real time and redirects their eye gaze toward the camera.

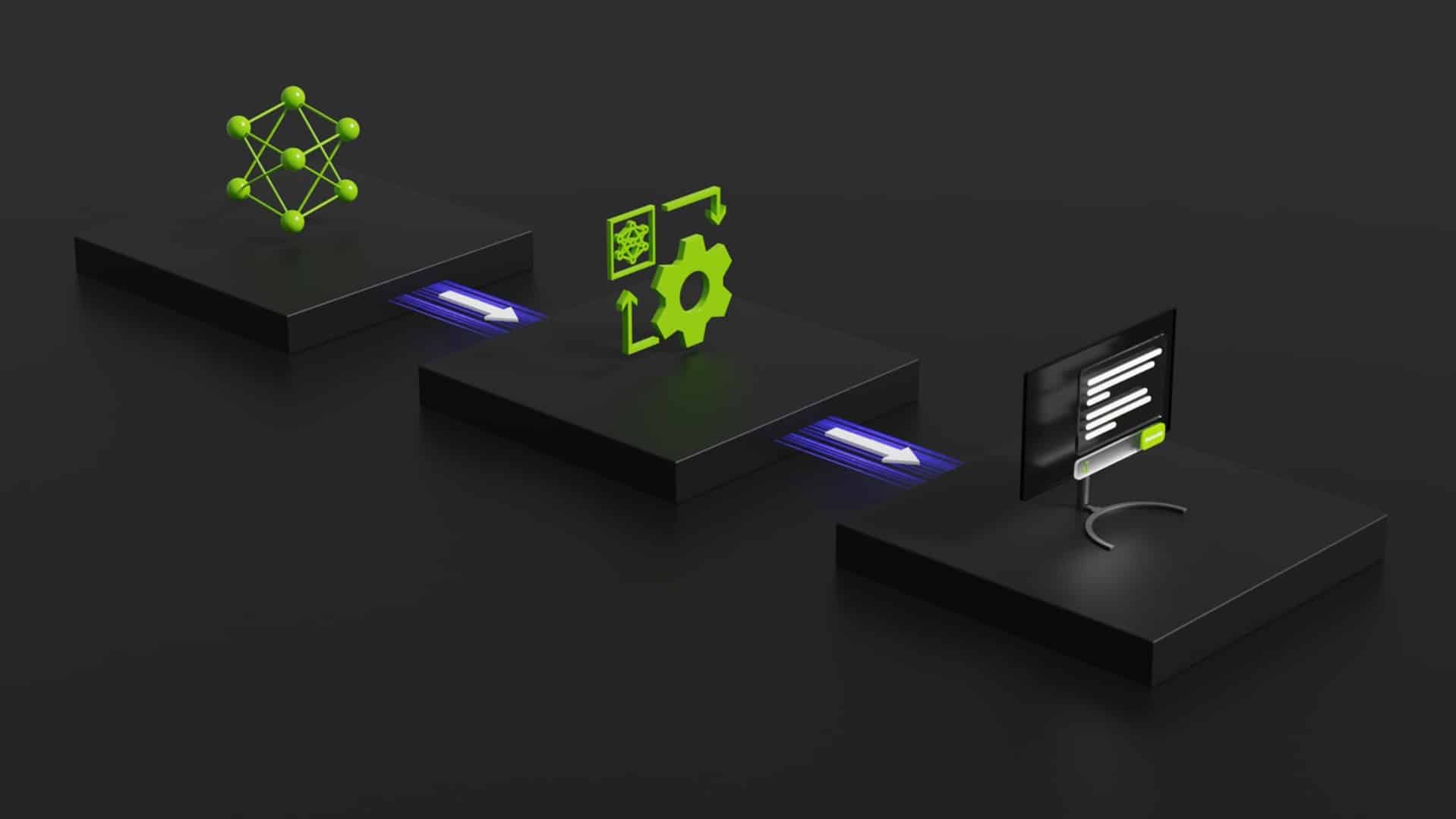

Accelerate AI application development

NVIDIA NIM streamlines the creation of complex AI applications by enabling the integration of specialized microservices across domains. Using NIM microservices, organizations can bypass the complexities of building AI models from scratch, saving time and resources. This frees teams to focus on integrating these pre-trained models into their workflows, accelerating operational transformation. The modular nature of NIM microservices allows for the assembly of customized AI solutions that meet specific business needs.

For example, a company can combine ACE NIM microservices, including speech recognition, with LLM NIM microservices to create digital humans for personalized customer service across industries such as healthcare, finance, and retail.

Video 1. Learn how digital humans can transform industries

NIM microservices can also be integrated into supply chain management systems, combining the cuOpt NIM microservice for route optimization with NeMo Retriever NIM microservices for retrieval-augmented generation (RAG) and LLM NIM microservices so that businesses can talk to their supply chain.

Video 2. Respond to supply chain changes in seconds using NIM microservices

Get started

NVIDIA NIM empowers enterprises to fully harness AI, accelerating innovation, maintaining a competitive edge, and delivering superior customer experiences. Explore the latest AI models available with NIM microservices and discover how these powerful tools can transform your business.