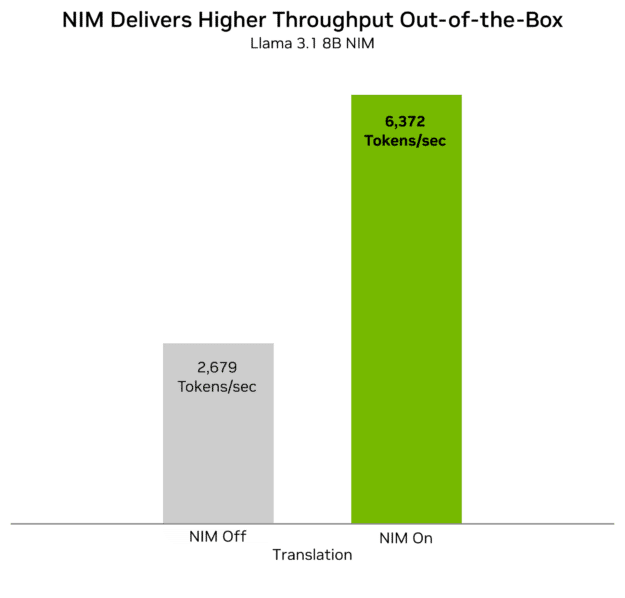

NVIDIA NIM, part of NVIDIA AI Enterprise, now supports tool calling for models like Llama 3.1. It also integrates with LangChain to provide you with a production-ready solution for building agentic workflows. NIM microservices provide the best performance for open-source models such as Llama 3.1 and are available to test for free from the NVIDIA API Catalog in LangChain applications.


Building AI agents with NVIDIA NIM

The Llama 3.1 NIM microservice enables you to build generative AI applications with advanced functionality for production deployments. You can use an accelerated open model with state-of-the-art agentic capabilities to build more sophisticated and reliable applications. For more information, see Supercharging Llama 3.1 across NVIDIA Platforms.

NIM provides an OpenAI-compatible tool-calling API for familiarity and consistency. You can now use LangChain to bind tools to NIM microservices and create structured outputs that bring agent capabilities to your applications.
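Because the API follows the OpenAI chat-completions format, a tool is described to the model as a JSON Schema inside a `tools` array. The following minimal sketch only builds a request body and does not send it. The model ID `meta/llama-3.1-70b-instruct` and the endpoint URL are assumptions based on the NVIDIA API Catalog, so confirm both there before use.

```python
import json
from typing import Any, Dict, List

# Assumed endpoint and model ID; confirm both in the NVIDIA API Catalog.
NIM_CHAT_URL = "https://integrate.api.nvidia.com/v1/chat/completions"
MODEL_ID = "meta/llama-3.1-70b-instruct"

# A tool definition in the OpenAI "function" format: a name, a description,
# and a JSON Schema for the arguments the model should produce.
WEATHER_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather for a location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The location to get the weather for.",
                }
            },
            "required": ["location"],
        },
    },
}


def build_request(question: str, tools: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Build a chat-completions request body that offers tools to the model."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": question}],
        "tools": tools,
        # "auto" lets the model choose between answering directly and calling a tool.
        "tool_choice": "auto",
    }


body = build_request("What's the weather in Boston?", [WEATHER_TOOL])
print(json.dumps(body, indent=2))
```

An OpenAI-compatible client would POST this body to the endpoint with an `Authorization: Bearer <API key>` header. In LangChain, `bind_tools` produces this kind of schema from a Python function, so you rarely write it by hand.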

Tool usage with NIM

Tools accept structured output from a model, execute an action, and return the results to the model in a structured format. They often involve external API calls, but this isn't mandatory.
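That round trip can be sketched in plain Python: a registry maps tool names to functions, the model's structured arguments are passed in, and the result goes back as a structured message. This is a minimal sketch under stated assumptions: `get_current_weather` here is a hypothetical stub that returns canned data instead of calling an external API, and the `role: "tool"` message shape follows the OpenAI chat format.

```python
import json
from typing import Any, Callable, Dict


def get_current_weather(location: str) -> Dict[str, Any]:
    """Hypothetical stub: a real tool would call a weather API here."""
    return {"location": location, "forecast": "sunny", "temperature_f": 72}


# Map the tool names the model may emit to the Python callables behind them.
TOOLS: Dict[str, Callable[..., Any]] = {"get_current_weather": get_current_weather}


def run_tool(call_id: str, name: str, arguments: Dict[str, Any]) -> Dict[str, str]:
    """Execute one tool call and wrap the result as a structured tool message."""
    if name not in TOOLS:
        result: Any = {"error": f"unknown tool: {name}"}
    else:
        result = TOOLS[name](**arguments)
    # The result returns to the model as JSON text, tagged with the call ID
    # so the model can match it to the request it made.
    return {"role": "tool", "tool_call_id": call_id, "content": json.dumps(result)}


message = run_tool("call_1", "get_current_weather", {"location": "San Diego"})
print(message)
```

Returning an error payload for unknown tool names, rather than raising, lets the model see the failure and try again.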

For example, a weather tool might get the current weather in San Diego, while a web search tool might get the current score of a San Francisco 49ers football game.

To support tool usage in an agent workflow, a model must first be trained to detect when to call a function and to output a structured response, such as JSON containing the function name and its arguments. The model is then optimized as a NIM microservice for NVIDIA infrastructure and easy deployment, making it compatible with frameworks like LangChain's LangGraph.
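In the OpenAI-compatible format, a model that decides to call a function returns a `tool_calls` list instead of plain text, and each call carries the function name plus its arguments as a JSON-encoded string. The sketch below uses a hand-written sample message, not captured NIM output, to show how a client might distinguish a tool call from a plain answer and decode the arguments. The helper name `extract_tool_calls` is hypothetical.

```python
import json
from typing import Any, Dict, List, Tuple

# Hand-written sample of an assistant message in the OpenAI chat format;
# illustrative only, not captured from a real NIM response.
SAMPLE_MESSAGE: Dict[str, Any] = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_abc123",
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "arguments": '{"location": "Boston, MA"}',
            },
        }
    ],
}


def extract_tool_calls(message: Dict[str, Any]) -> List[Tuple[str, str, Dict[str, Any]]]:
    """Return (call_id, function_name, parsed_arguments) for each tool call.

    An empty list means the model answered in plain text instead.
    """
    calls = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        # Arguments arrive as a JSON string and must be decoded before use.
        calls.append((call["id"], fn["name"], json.loads(fn["arguments"])))
    return calls


print(extract_tool_calls(SAMPLE_MESSAGE))
print(extract_tool_calls({"role": "assistant", "content": "It's sunny in Boston."}))
```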

Use LangChain to develop LLM applications with tools

Here's how to use LangChain with models like Llama 3.1 that support tool calling. For more information about installing packages and setting up the ChatNVIDIA library, see the LangChain NVIDIA documentation.

To get a list of models that support tool calling, run the following code:

```python
from langchain_nvidia_ai_endpoints import ChatNVIDIA

# Keep only the catalog models that advertise tool-calling support.
tool_models = [
    model for model in ChatNVIDIA.get_available_models() if model.supports_tools
]
```

You can create your own functions or tools and bind them to models using LangChain's `bind_tools` method.

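```python
# langchain-core 0.3 and later deprecate pydantic_v1; there, use `from pydantic import Field`.
from langchain_core.pydantic_v1 import Field
from langchain_core.tools import tool
from langchain_nvidia_ai_endpoints import ChatNVIDIA


@tool
def get_current_weather(
    location: str = Field(..., description="The location to get the weather for."),
):
    """Get the current weather for a location."""
    ...  # Call a weather API here.


# tool_models comes from the previous snippet.
llm = ChatNVIDIA(model=tool_models[0].id).bind_tools(tools=[get_current_weather])

response = llm.invoke("What's the weather in Boston?")

# The tool calls chosen by the model, each with a name, arguments, and an ID.
print(response.tool_calls)
```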

Inside the `get_current_weather` function, you can use something like the Tavily API for generic search or the National Weather Service API for weather data.
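As an illustration of the National Weather Service option, the sketch below implements a forecast lookup with the standard library. It assumes the caller already has a US latitude and longitude: turning a place name like "Boston" into coordinates needs a separate geocoding step, not shown. It follows the NWS two-step flow, where `/points/{lat},{lon}` returns a forecast URL and that URL returns a list of forecast periods, and it reports the first period as an approximation of current conditions. The `fetch_json` parameter is a hypothetical hook so the function can run against canned data, and the User-Agent string is a placeholder.

```python
import json
import urllib.request
from typing import Any, Callable, Dict

NWS_BASE = "https://api.weather.gov"
# NWS asks clients to identify themselves; replace this placeholder value.
USER_AGENT = "weather-tool-example (contact@example.com)"


def _fetch_json(url: str) -> Dict[str, Any]:
    """GET a URL and decode its JSON body."""
    req = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def current_forecast(
    lat: float,
    lon: float,
    fetch_json: Callable[[str], Dict[str, Any]] = _fetch_json,
) -> str:
    """Return a one-line forecast for a US latitude/longitude."""
    # Step 1: resolve the point to its gridpoint forecast URL.
    point = fetch_json(f"{NWS_BASE}/points/{lat:.4f},{lon:.4f}")
    forecast_url = point["properties"]["forecast"]
    # Step 2: fetch the forecast and summarize the first (current) period.
    periods = fetch_json(forecast_url)["properties"]["periods"]
    first = periods[0]
    return (
        f"{first['name']}: {first['temperature']} {first['temperatureUnit']}, "
        f"{first['shortForecast']}"
    )


# Example (requires network access): current_forecast(42.3601, -71.0589)  # Boston
```

A real `get_current_weather` tool could geocode its `location` argument and then delegate to a helper like this one.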

Explore more resources

The preceding code is only a small example of how models can support tools. LangChain's LangGraph integrates with NIM microservices, as shown in the NVIDIA NIMs with Tool Calling for Agents example on GitHub.

Check out other LangGraph examples that build stateful, multi-actor applications with NIM microservices for use cases such as customer support, coding assistants, advanced RAG, and research.

You can build advanced RAG with LangGraph and NVIDIA NeMo Retriever in an agent workflow that uses techniques such as self-RAG and corrective RAG. For more information, see Build an Agentic RAG Pipeline with Llama 3.1 and NVIDIA NeMo Retriever NIM Microservices and the associated notebook.
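The core idea of corrective RAG is a grading step between retrieval and generation: retrieved documents are checked for relevance, and if none pass, the workflow falls back to another source such as web search. The following is a simplified, framework-free sketch of that control flow only. In LangGraph implementations each step is typically a graph node and the grader is an LLM; here a hypothetical keyword-overlap grader and stub retrievers stand in so that the logic runs on its own.

```python
from typing import Callable, List, Set

Retriever = Callable[[str], List[str]]
Grader = Callable[[str, str], bool]


def _content_words(text: str) -> Set[str]:
    """Lowercased words longer than three characters, stripped of punctuation."""
    words = (token.strip("?.,!").lower() for token in text.split())
    return {w for w in words if len(w) > 3}


def keyword_grader(question: str, doc: str) -> bool:
    """Toy relevance check standing in for an LLM-based grader."""
    return bool(_content_words(question) & _content_words(doc))


def corrective_retrieve(
    question: str,
    retrieve: Retriever,
    web_search: Retriever,
    grade: Grader = keyword_grader,
) -> List[str]:
    """Retrieve, keep only relevant documents, and fall back if none survive."""
    relevant = [doc for doc in retrieve(question) if grade(question, doc)]
    if relevant:
        return relevant
    # Nothing relevant was retrieved: correct course with a different source.
    return web_search(question)


# Hypothetical stand-ins for a vector-store retriever and a web search tool.
def local_index(question: str) -> List[str]:
    return [
        "NeMo Retriever provides embedding and reranking microservices.",
        "Llama 3.1 supports tool calling.",
    ]


def fake_web_search(question: str) -> List[str]:
    return [f"web result for: {question}"]


print(corrective_retrieve("Which models support tool calling?", local_index, fake_web_search))
print(corrective_retrieve("Who won the 49ers game?", local_index, fake_web_search))
```

The first question keeps the local document that mentions tool calling; the second matches nothing locally and falls back to the web search stub.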

Get started building your applications with NVIDIA NIM microservices!