Creating a high-performing Hebrew massive language mannequin (LLM) presents distinct challenges stemming from the wealthy and sophisticated nature of the Hebrew language itself. The intricate construction of Hebrew, with phrases fashioned via root and sample mixtures, calls for subtle modeling approaches. Furthermore, the shortage of capitalization and the frequent absence of punctuation like durations and commas in Hebrew textual content poses difficulties for tokenizers in correctly segmenting sentences.

Creating a high-performing Hebrew massive language mannequin (LLM) presents distinct challenges stemming from the wealthy and sophisticated nature of the Hebrew language itself. The intricate construction of Hebrew, with phrases fashioned via root and sample mixtures, calls for subtle modeling approaches. Furthermore, the shortage of capitalization and the frequent absence of punctuation like durations and commas in Hebrew textual content poses difficulties for tokenizers in correctly segmenting sentences.

For instance, the phrase הקפה may imply “the espresso” or “encircle,” relying on the pronunciation. The versatile phrase order allowable in Hebrew syntax provides one other layer of complexity. Compounding these points is the excessive diploma of morphological ambiguity, the place a single phrase can point out a number of meanings, relying on the context. As well as, the Hebrew language avoids diacritical marks that convey vowel sounds, which additional complicates correct textual content processing and understanding.

Overcoming these distinctive linguistic hurdles is essential for coaching an AI mannequin able to actually comprehending and producing high-quality Hebrew textual content. The DictaLM-2.0 suite of Hebrew-specific LLMs was skilled on classical and fashionable Hebrew texts, and has lately led the Hugging Face Open Leaderboard for Hebrew LLMs.

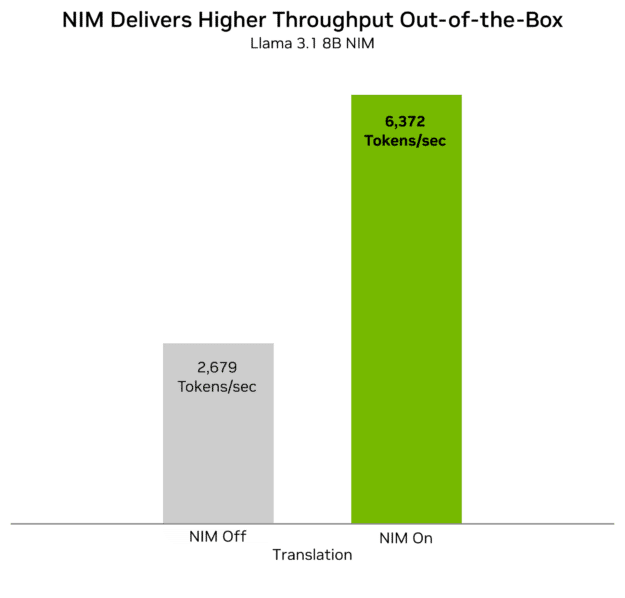

This submit explains learn how to use NVIDIA TensorRT-LLM and NVIDIA Triton Inference Server to optimize and speed up inference deployment of this mannequin at scale. TensorRT-LLM is a complete open-source library for compiling and optimizing LLMs for inference on NVIDIA GPUs. Triton Inference Server is an open-source platform that streamlines and accelerates the deployment of AI inference workloads to create production-ready deployment of LLMs.

What’s a low-resource language?

Within the context of conversational AI, low-resource languages are these with out massive quantities of knowledge accessible for coaching. Whereas this submit focuses on Hebrew, the identical challenges are prevalent when coping with low-resource languages on the whole, together with the languages of Southeast Asia. LLMs resembling SEA-LION and SeaLLM tackle these challenges by coaching on particular knowledge that higher represents the regional cultures and languages. Each of those LLMs can be found as NVIDIA NIM microservices which might be at the moment accessible for prototyping within the NVIDIA API catalog.

The vast majority of LLMs are primarily skilled on English textual content corpora, resulting in an inherent bias in the direction of Western linguistic patterns and cultural norms. This leads to LLMs struggling to precisely seize the nuances, idioms, and cultural contexts particular to non-Western languages and societies.

Moreover, the shortage of high-quality digitized textual content knowledge for a lot of non-Western languages exacerbates the useful resource shortage problem, making it tough for LLMs to study and generalize successfully throughout these languages. Consequently, LLMs usually fail to mirror the culturally applicable expressions, emotional connotations, and contextual subtleties inherent in non-Western languages, resulting in potential misinterpretations or biased outputs.

Modern LLMs additionally depend on statistically-driven tokenization strategies. As a result of underrepresentation of low-resource languages in coaching datasets, these tokenizers usually have a restricted set of tokens for every of those languages. This leads to poor compression effectivity for these languages. As a consequence, producing textual content in these languages turns into more difficult, and producing prolonged content material requires considerably extra computational sources and complexity.

Optimization workflow

For the primary optimization use case, we targeted on DictaLM 2.0 Instruct, a mannequin regularly pre-trained on Mistral 7B with a customized tokenizer skilled for Hebrew, after which additional aligned for chat functions.

git clone https://huggingface.co/dicta-il/dictalm2.0-instruct

Arrange TensorRT-LLM

To start, clone the most recent model of TensorRT-LLM. TensorRT-LLM incorporates many superior optimizations we’ll use on this instance.

git lfs set up git clone -b v0.11.0 https://github.com/NVIDIA/TensorRT-LLM.git cd TensorRT-LLM

Pull the Triton container

Subsequent, pull the Triton Inference Server container with TensorRT-LLM backend:

docker pull nvcr.io/nvidia/tritonserver:24.07-trtllm-python-py3

docker run --rm --runtime=nvidia --gpus all --volume

${PWD}/../dictalm2.0-instruct:/dictalm-2-instruct --volume

${PWD}:/TensorRT-LLM --entrypoint /bin/bash -it --workdir /TensorRT-LLM

nvcr.io/nvidia/tritonserver:24.07-trtllm-python-py3

Create the FP16 TensorRT-LLM engine

Convert the Hugging Face checkpoint to TensorRT-LLM format:

python examples/llama/convert_checkpoint.py --model_dir /dictalm-2-instruct/

--output_dir fp16_mistral/ --tp_size 1 --dtype float16

Then construct the optimized engine:

trtllm-build --checkpoint_dir fp16_mistral/ --output_dir

fp16_mistral_engine/ --max_batch_size 64 --max_output_len 1024

--paged_kv_cache allow

Quantize to INT4 and create the environment friendly TensorRT-LLM engine

To profit from the extra environment friendly INT4 numeric weight illustration, saving important reminiscence bandwidth and capability, carry out post-training quantization (PTQ). PTQ requires a consultant small dataset to replace the weights whereas sustaining statistical similarity. The offered script will pull an English calibration dataset, however you possibly can additionally replace the script to tug and use knowledge out of your goal language. TensorRT-LLM performs the quantization whereas changing to the TensorRT-LLM format.

PTQ will allow the mannequin to acquire comparable outcomes to the FP16 mannequin. It’s anticipated that, even after PTQ, the LLM will show some degree of lower in accuracy. Although that is out of scope, it needs to be talked about that to beat any efficiency lower you may look into quantization conscious coaching, or practice with FP8 or FP4 utilizing an NVIDIA transformer engine together with newer NVIDIA H100 and NVIDIA B200 GPUs.

Obtain the Dicta calibration dataset consisting of a mixture of Hebrew and English tokens. It will considerably enhance INT4 accuracy, compared to utilizing a default English calibration dataset.

git clone

https://huggingface.co/datasets/dicta-il/dictalm2.0-quant-calib-dataset

Quantize to INT4 utilizing the calibration dataset:

python3 examples/quantization/quantize.py --kv_cache_dtype fp8 --dtype

float16 --qformat int4_awq --output_dir ./quantized_mistral_int4

--model_dir /dictalm-2-instruct --calib_size 32

Then construct the engine:

trtllm-build --checkpoint_dir quantized_mistral_int4/ --output_dir

quantized_mistral_int4_engine/ --max_batch_size 64 --max_output_len 1024

--weight_only_precision int4 --gemm_plugin float16 --paged_kv_cache allow

Deploy the mannequin with Triton Inference Server

After the engine is constructed, you may deploy the mannequin with Triton Inference Server. It will assist scale back setup and deployment time. The Triton Inference Server backend for TensorRT-LLM leverages the TensorRT-LLM C++ runtime for fast inference execution and consists of strategies like in-flight batching and paged KV caching. You’ll be able to entry Triton Inference Server with the TensorRT-LLM backend as a prebuilt container via the NVIDIA NGC catalog.

First, arrange TensorRT-LLM backend:

git clone -b v0.11.0

https://github.com/triton-inference-server/tensorrtllm_backend.git

cd tensorrtllm_backend

cp ../TensorRT-LLM/fp16_mistral_engine/*

all_models/inflight_batcher_llm/tensorrt_llm/1/

Coping with personalized tokenizers requires adopting the workaround workflow. Within the case of low-resource languages, tokenizers usually characteristic totally different vocabularies, distinctive token mapping, and so forth.

First, arrange the tokenizer directories:

HF_MODEL=/dictalm-2-instruct

ENGINE_PATH=tensorrtllm_backend/all_models/inflight_batcher_llm/tensorrt_llm/1

python3 instruments/fill_template.py -i

all_models/inflight_batcher_llm/preprocessing/config.pbtxt

tokenizer_dir:${HF_MODEL},tokenizer_type:auto,triton_max_batch_size:32,preprocessing_instance_count:1

python3 instruments/fill_template.py -i

all_models/inflight_batcher_llm/postprocessing/config.pbtxt

tokenizer_dir:${HF_MODEL},tokenizer_type:auto,triton_max_batch_size:32,postprocessing_instance_count:1

python3 instruments/fill_template.py -i

all_models/inflight_batcher_llm/ensemble/config.pbtxt

triton_max_batch_size:32

python3 instruments/fill_template.py -i

all_models/inflight_batcher_llm/tensorrt_llm/config.pbtxt

triton_backend:tensorrtllm,triton_max_batch_size:32,decoupled_mode:True,m

ax_beam_width:1,engine_dir:${ENGINE_PATH},max_tokens_in_paged_kv_cache:40

96,max_attention_window_size:4096,kv_cache_free_gpu_mem_fraction:0.5,excl

ude_input_in_output:True,enable_kv_cache_reuse:False,batching_strategy:in

flight_fused_batching,max_queue_delay_microseconds:0

rm -r all_models/inflight_batcher_llm/tensorrt_llm_bls

Then launch with Triton Inference Server:

docker run --rm -it

-p8000:8000 -p8001:8001 -p8002:8002

--gpus 0

--name triton_trtllm_server

-v $(pwd)/dictalm2.0-instruct:/dictalm-2-instruct

-v $(pwd):/workspace

-w /workspace

nvcr.io/nvidia/tritonserver:24.07-trtllm-python-py3 tritonserver

--model-repository=/workspace/tensorrtllm_backend/all_models/inflight_bat

cher_llm --model-control-mode=NONE --log-verbose=0

Inference with Triton Inference Server

To ship requests to and work together with the operating server, you should use one of many Triton shopper libraries or ship HTTP requests to the generated endpoint.

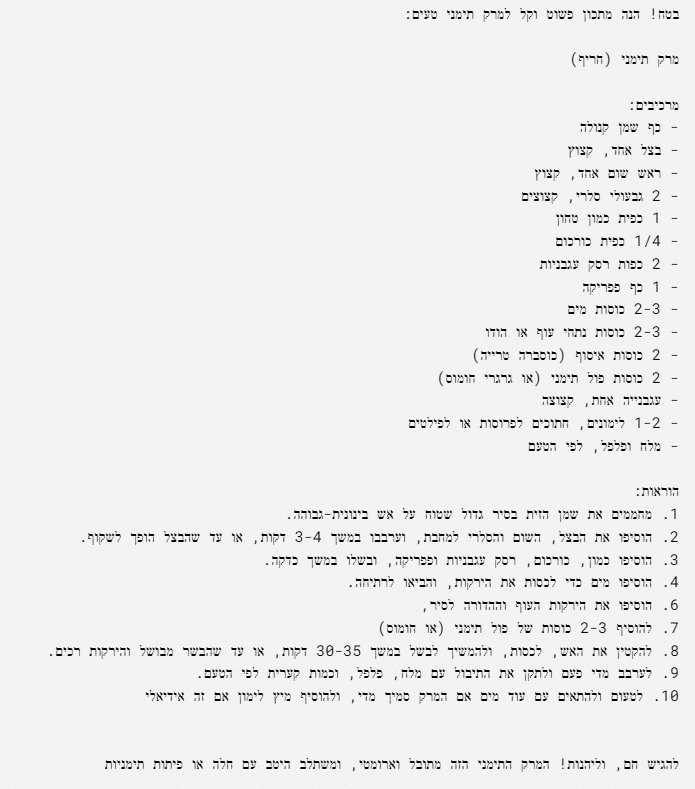

To get began with a easy request, use the next curl command to ship HTTP requests to the generated endpoint. We particularly ask a difficult query, requiring each detailed data in addition to cultural context: “Do you have got recipes for Yemenite soup?”

curl -X POST localhost:8000/v2/fashions/ensemble/generate

-d

'{

"text_input": "[INST]האם יש לך מתכונים למרק תימני?[/INST]",

"parameters": {

"max_tokens": 1000,

"bad_words":[""],

"stop_words":[""]

}

}'

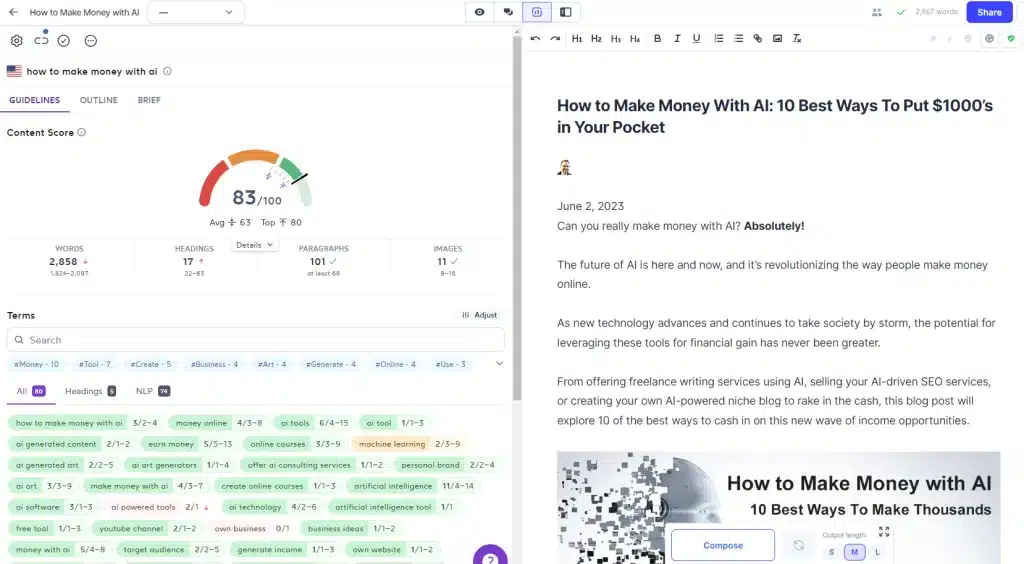

The LLM generates an in depth response with an in depth recipe. It provides cultural context by noting when this dish is often served, in addition to a number of variations (Determine 1).

Determine 1. LLM-generated Yemenite soup recipe in Hebrew, together with cultural context

Efficiency outcomes

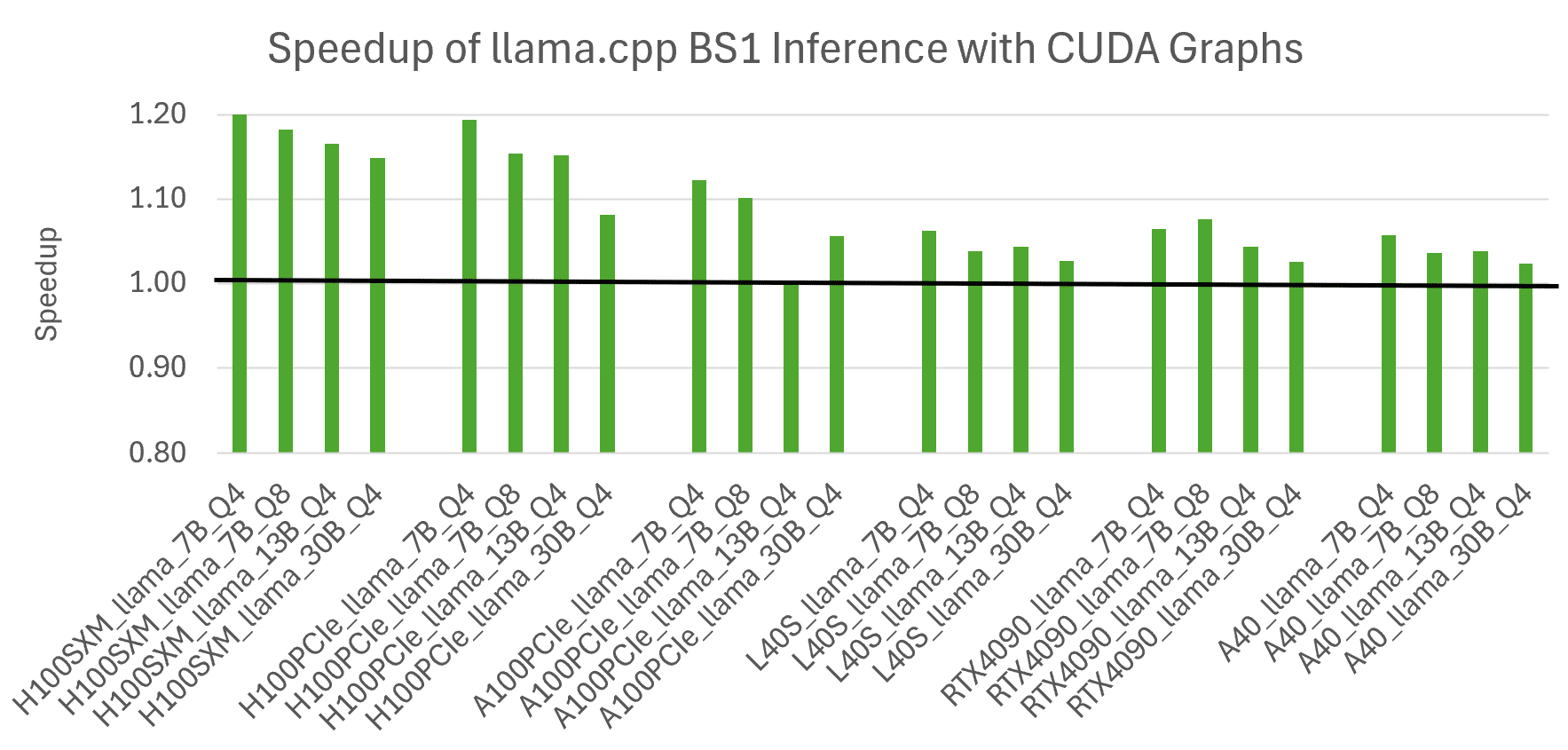

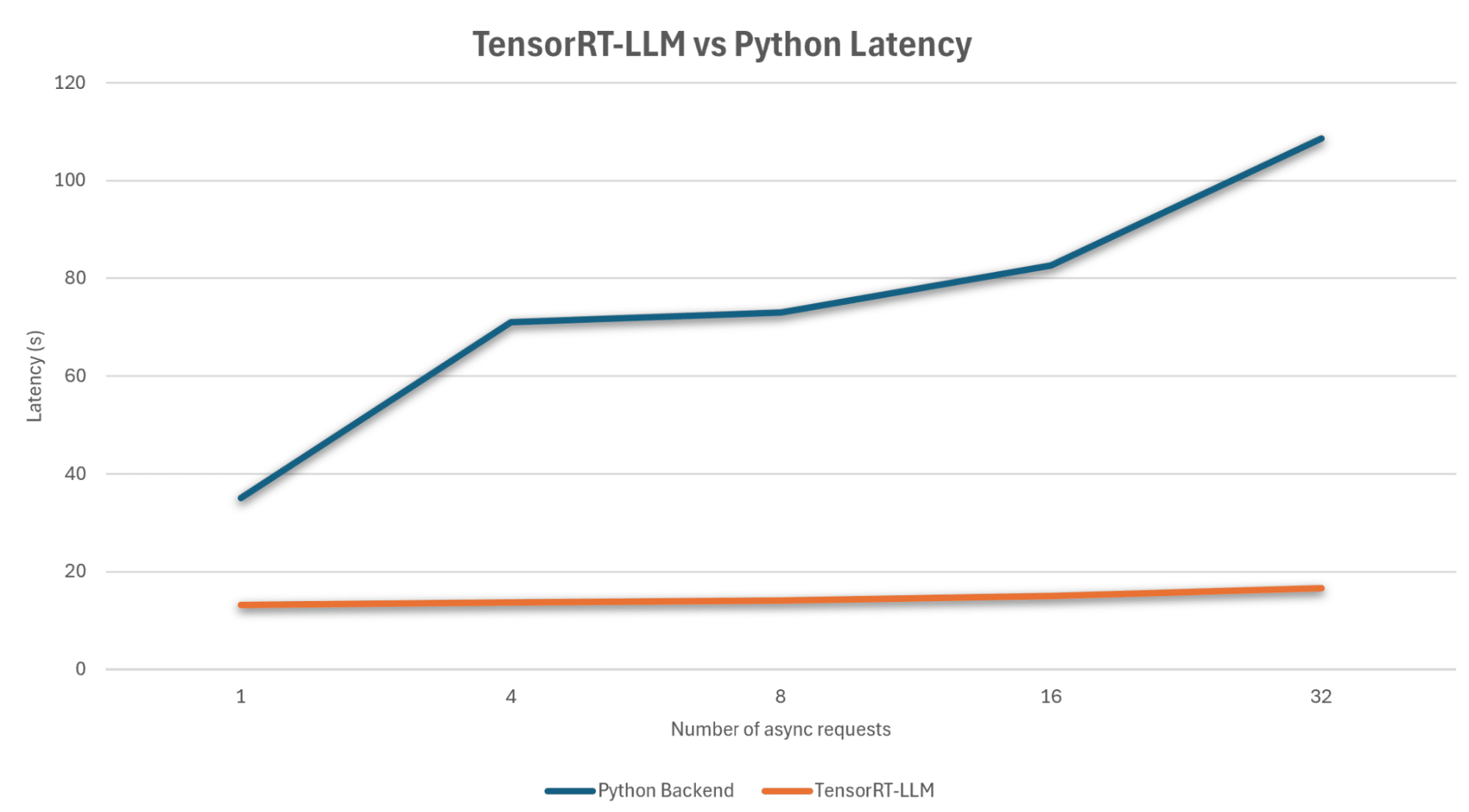

For efficiency experiments and measurement, we ran the mannequin with totally different acceleration configurations on a single NVIDIA A100 GPU. Determine 2 exhibits the latency to finish totally different numbers of async requests of 1024 output tokens, evaluating the baseline Python backend (blue line) to Tensor-RT LLM (pink line). The non-accelerated Python backend grows in latency because the variety of requests will increase, whereas TensorRT-LLM gives very efficient scaling all through.

Determine 2. Efficiency graph exhibiting the time to finish N async requests

Conclusion

With baseline help for a lot of standard LLM architectures, TensorRT-LLM makes it straightforward to deploy, experiment, and optimize with quite a lot of LLMs. Collectively, TensorRT-LLM and Triton Inference Server present an integrative toolkit for optimizing, deploying, and operating LLMs effectively.

To get began, go to NVIDIA/TensorRT-LLM on GitHub to obtain and arrange the TensorRT-LLM open-source library, and experiment with different multi-language LLMs, resembling Baichuan-7B.