While generative AI can be used to create clever rhymes, cool images, and soothing voices, a closer look at the techniques behind these impressive content generators reveals probabilistic learners, compression tools, and sequence modelers. When applied to quantitative finance, these techniques can help disentangle and learn complex associations in financial markets.


Market scenarios are essential for risk management, strategy backtesting, portfolio optimization, and regulatory compliance. These hypothetical data models represent potential future market conditions, helping financial institutions simulate and assess outcomes and make informed investment decisions.

Specific techniques excel in different areas, such as:

- Data generation with variational autoencoders or denoising diffusion models

- Modeling sequences with intricate dependencies using transformer-based generative models

- Understanding and predicting time-series dynamics with state-space models

While these techniques may operate in distinct ways, they can also be combined to yield powerful results.

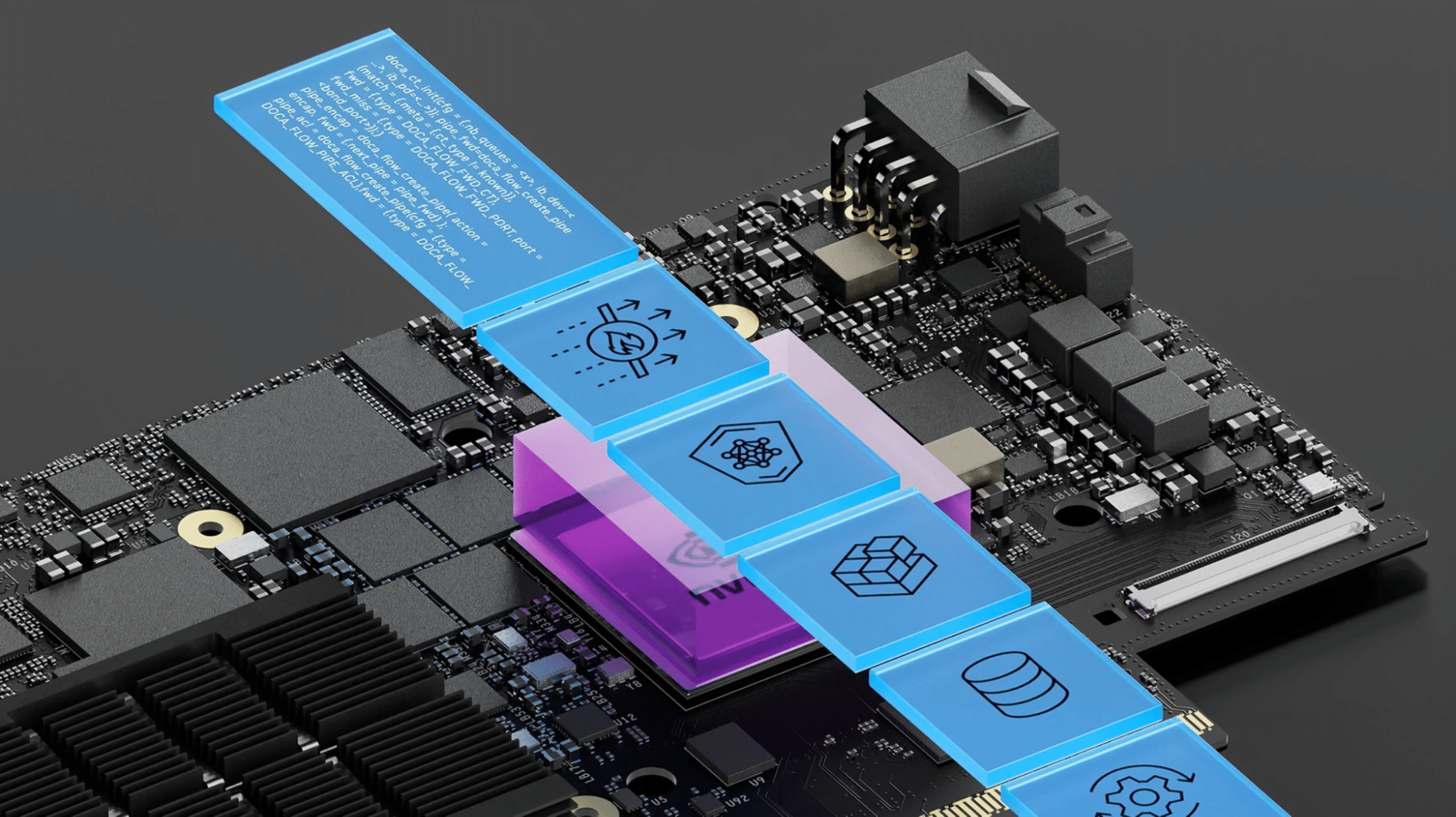

This post explores how variational autoencoders (VAE), denoising diffusion models (DDM), and other generative tools can be integrated with large language models (LLM) to efficiently create market scenarios with desired properties. It showcases a scenario generation reference architecture powered by NVIDIA NIM, a set of microservices designed to accelerate the deployment of generative models.

One toolset, many applications

Generative AI offers a unified framework for a variety of quantitative finance problems that have previously been addressed with distinct approaches. Once a model has been trained to learn the distribution of its input data, it can be used as a foundation model for a variety of tasks.

For instance, it can generate samples for building simulations or risk scenarios. It can also pinpoint which samples are out of distribution, acting as an outlier detector or stress scenario generator. Because market data arrives at different frequencies, cross-sectional market snapshots have gaps. A generative model can supply missing data that fits the observed data in a plausible way, which is useful for nowcasting models or for dealing with illiquid points. Finally, autoregressive next-token prediction and state-space models can help with forecasting.

A significant bottleneck for domain experts leveraging such generative models is the lack of platform support that bridges their ideas and intentions with the complex infrastructure needed to deploy these models. While LLMs have gained mainstream use across industries, including finance, they are primarily used for data processing tasks, such as Q&A and summarization, or for coding tasks, like generating code stubs that human developers then enhance and integrate into proprietary libraries.

Integrating LLMs with complex models can bridge the communication gap between quantitative experts and generative AI models, as explained below.

Market scenario generation

Traditionally, market scenario generation has relied on methods including expert specifications (“shift the US yield curve up by $X$ bp in parallel”), factor decompositions (“bump the EUR swap curve by $Y$ bp along the first PCA direction”), and statistical methods such as variance-covariance or bootstrapping. While these methods help produce new scenarios, they lack a full picture of the underlying data distribution and often require manual adjustment. Generative approaches, which learn data distributions implicitly, elegantly overcome this modeling bottleneck.
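To make the traditional approaches concrete, the following NumPy sketch implements a parallel shift, a bump along the first principal component of daily curve changes, and a historical bootstrap of daily changes. It runs on synthetic curves only. The function names, the 5-tenor grid, and the convention that a PCA bump of `shift_bp` moves the curve by that Euclidean amount along a unit-length direction are illustrative assumptions, not part of the workflow described in this post.

```python
import numpy as np

BP = 1e-4  # one basis point in decimal units


def parallel_shift(curve, shift_bp):
    """Expert-style scenario: add the same shift (in bp) to every tenor."""
    return curve + shift_bp * BP


def pca_bump(curve_history, curve, shift_bp, component=0):
    """Factor-style scenario: move `curve` along a principal direction of daily changes.

    The direction is a unit vector, so `shift_bp` sets the Euclidean size of the move
    (an assumed scaling convention; desks use several).
    """
    changes = np.diff(curve_history, axis=0)                 # (n_days - 1, n_tenors)
    centered = changes - changes.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)  # rows of vt: principal directions
    direction = vt[component]
    if direction.sum() < 0:                                  # SVD signs are arbitrary; orient "up"
        direction = -direction
    return curve + shift_bp * BP * direction


def bootstrap_scenarios(curve_history, curve, n_scenarios, rng):
    """Statistical scenario: apply randomly resampled historical daily changes to `curve`."""
    changes = np.diff(curve_history, axis=0)
    idx = rng.integers(0, len(changes), size=n_scenarios)
    return curve + changes[idx]


# Toy usage on synthetic (not market) data: 250 days of 5-tenor curves
rng = np.random.default_rng(0)
tenors = np.array([1.0, 2.0, 5.0, 10.0, 30.0])
level = 0.03 + np.cumsum(rng.normal(0, 5 * BP, size=250))
slope = 0.01 + np.cumsum(rng.normal(0, 2 * BP, size=250))
history = level[:, None] + slope[:, None] * np.log1p(tenors)[None, :] / np.log1p(30.0)
today = history[-1]
print(parallel_shift(today, 100) - today)
print(pca_bump(history, today, 100) - today)
print(bootstrap_scenarios(history, today, n_scenarios=3, rng=rng).shape)
```

Each method needs a hand-chosen shift size or resampling rule, which is the manual adjustment mentioned above.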

LLMs can be combined with scenario generation models in powerful ways, simplifying interaction while also acting as natural language user interfaces for market data exploration. For example, a trader might wish to assess her book’s exposure if markets were to behave as they did during a previous event, such as the great financial crisis, the last U.S. election, the Flash Crash, or the dot-com bubble burst. An LLM trained on recorded data about such events could find and extract the characteristics of interest for those events or historical periods and pass them to a generative market model that creates similar market scenarios for use in downstream applications.

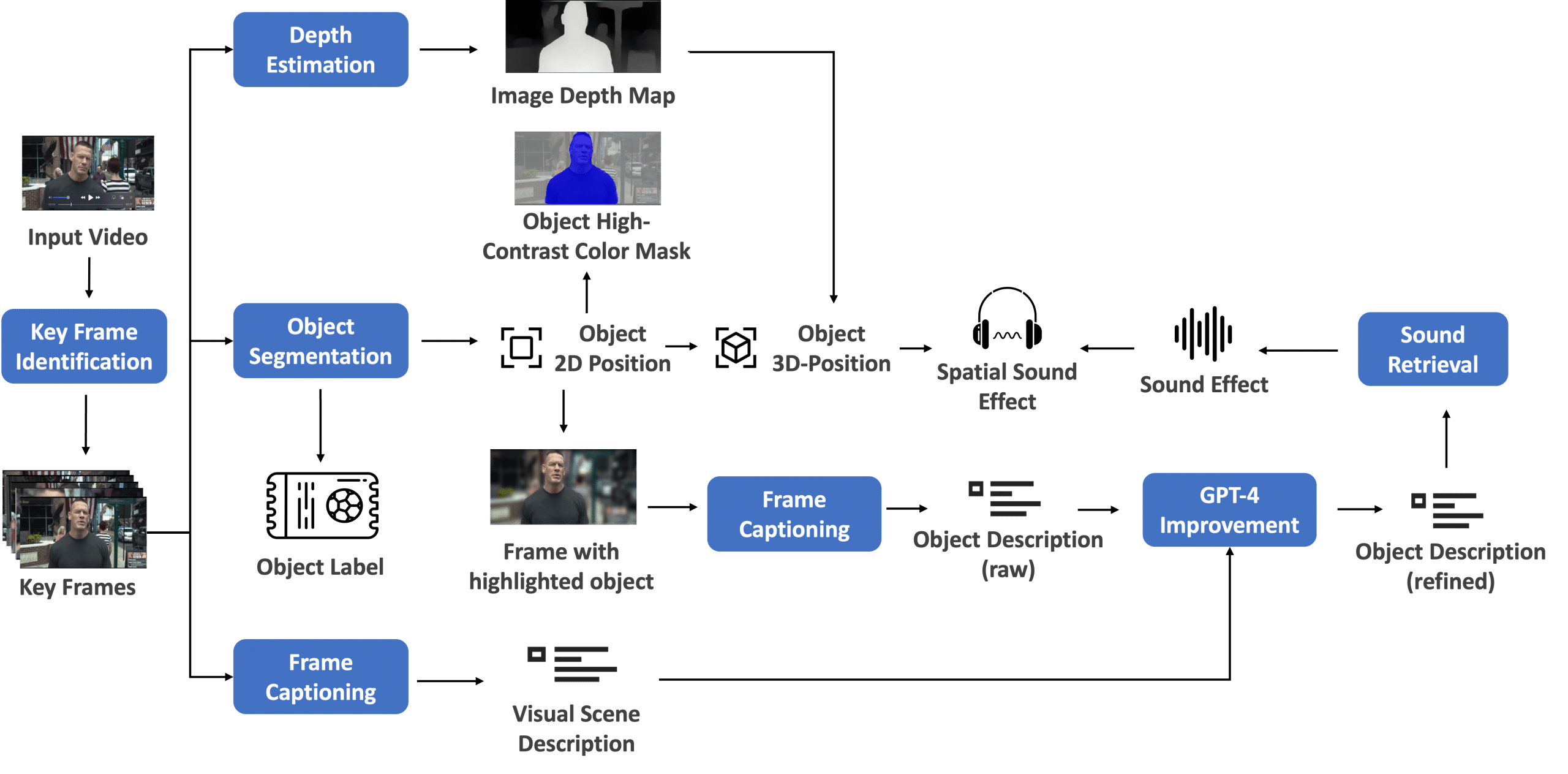

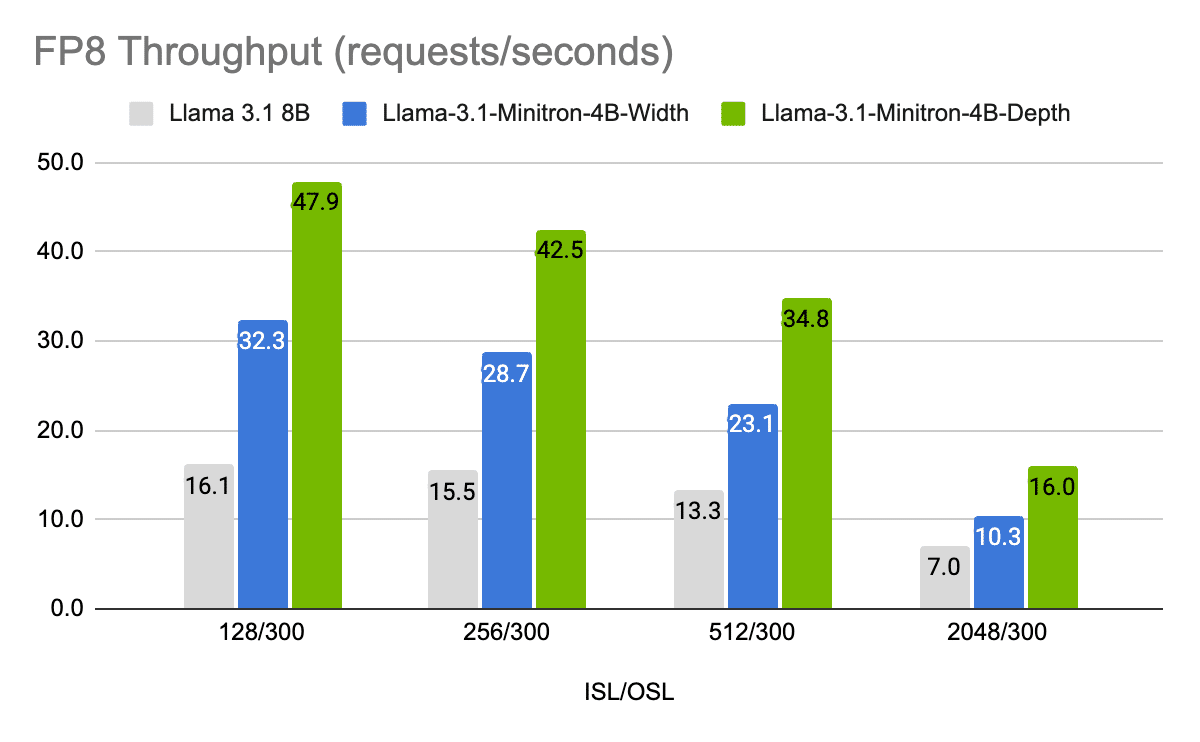

Figure 1 illustrates a reference architecture for market scenario generation, connecting user specifications with suitable generative tools. Sample code for an implementation powered by NVIDIA NIM is shown in the Sample implementation section.

The process begins with a user instruction, for example, a request to simulate an interest rate environment similar to the one “at the peak of the financial crisis.” An agent (or collection of agents) processes this request by first routing it to an LLM-powered interpreter that converts the natural language request into an intermediate format (in this case, a JSON file).

The LLM then translates “peak of the financial crisis” into a concrete historical period (September 15 to October 15, 2008) and maps the market objects of interest (U.S. swap curves and swaption volatility surfaces, for example) to their respective pre-trained generative models (VAE, DDM, and so on). Information about the historical period of interest can be retrieved through a data retriever component and passed on to the corresponding generative tools to generate similar market data.

Figure 1. Market scenario generator reference architecture using NIM microservices
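The routing step can be sketched as a small dispatcher that reads the interpreter’s JSON and calls one generator per requested market object. This is a minimal sketch assuming the JSON layout of Table 1 with a top-level `scenarios` key. `vae_generator`, `ddpm_generator`, and `GENERATORS` are hypothetical placeholders for pre-trained model endpoints, and the data retriever is omitted.

```python
import json
from datetime import date


# Hypothetical stand-ins for pre-trained generative models; a real system would call
# model inference endpoints and pass along historical data fetched by a data retriever.
def vae_generator(object_type, start, end):
    return f"VAE scenarios for {object_type}, conditioned on {start} to {end}"


def ddpm_generator(object_type, start, end):
    return f"DDPM scenarios for {object_type}, conditioned on {start} to {end}"


GENERATORS = {"VAE": vae_generator, "DDPM": ddpm_generator}


def dispatch(llm_json):
    """Route each request in the interpreter's JSON output to the matching generative tool."""
    results = []
    for request in json.loads(llm_json)["scenarios"]:
        generator = GENERATORS.get(request["method"])
        if generator is None:
            raise ValueError(f"unsupported method: {request['method']!r}")
        start = date.fromisoformat(request["period"]["start_date"])
        end = date.fromisoformat(request["period"]["end_date"])
        results.append(generator(request["object_type"], start, end))
    return results


# Example: the "peak of the financial crisis" request from Table 1
request_json = """{"scenarios": [
  {"method": "VAE", "object_type": "yield curve",
   "period": {"start_date": "2008-09-15", "end_date": "2008-10-15"}},
  {"method": "VAE", "object_type": "swaption volatility surface",
   "period": {"start_date": "2008-09-15", "end_date": "2008-10-15"}}]}"""
for line in dispatch(request_json):
    print(line)
```

Keeping the registry as a plain dictionary means that adding a new generative tool only requires registering another entry.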

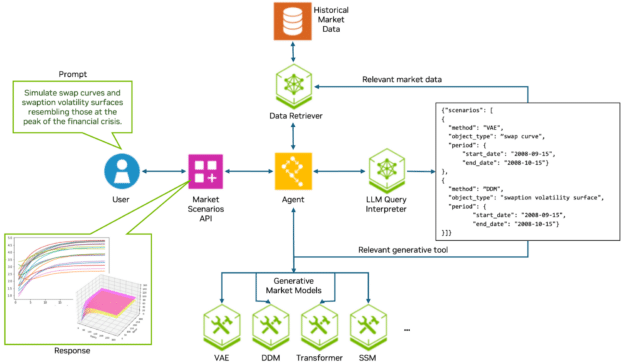

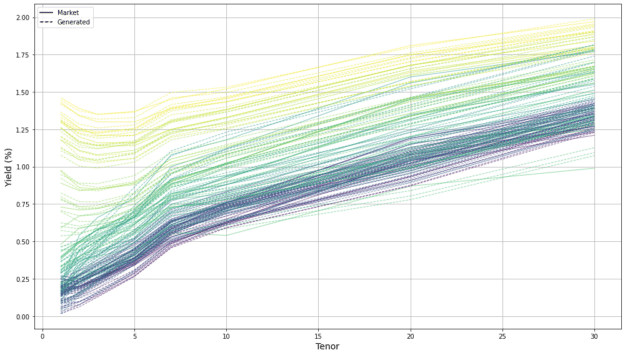

The generation process involves running inference on generative models that have been pre-trained on market data. Figures 2 and 3 illustrate an example of yield curve scenarios generated using a VAE model for the start of the COVID-19 pandemic.

Figure 2. Latent space projections of historical U.S. yield curves at the start of the COVID-19 pandemic, late February to mid-April 2020 (left), and scenarios added by sampling the latent space in the neighborhoods of realized yield curves (right)

Figure 3. Historical and synthetically generated yield curve scenarios for the start of the COVID-19 pandemic

Table 1 shows more market scenario requests along with the corresponding JSON outputs. Here, the user query names the model to use, for illustration purposes. In a production environment, a routing agent could select the right model automatically.

| Query | Answer | Model | Comments |
|---|---|---|---|
| “Using a VAE model, output yield curves similar to those in the second half of 2020.” | `{"scenarios": [{"method": "VAE", "object_type": "yield curve", "period": {"start_date": "2020-07-01", "end_date": "2020-12-31"}}]}` | llama3-70b-instruct | Correctly finds the second half of 2020. |
| “Generate yield curves similar to those in the year post-COVID.” | `{"scenarios": [{"method": "VAE", "object_type": "yield curve", "period": {"start_date": "2020-03-01", "end_date": "2022-12-31"}}]}` | llama3-70b-instruct | Correctly selects “VAE” as the default method and identifies the post-COVID period. |
| “Using DDPM, generate implied vol surfaces similar to Q1 in 2024.” | `{"scenarios": [{"method": "DDPM", "object_type": "implied vol surfaces", "period": {"start_date": "2024-01-01", "end_date": "2024-03-31"}}]}` | mixtral-8x22b-instruct-v0.1 | Correctly dates Q1 of 2024. |
| “Generate yield curves similar to the two-week period surrounding the Flash Crash.” | `{"scenarios": [{"method": "VAE", "object_type": "yield curve", "period": {"start_date": "2010-04-30", "end_date": "2010-05-13"}}]}` | llama3-70b-instruct | Correctly dates the Flash Crash on May 6, 2010, and a two-week period around it. |
| “Simulate inflation curves similar to those of last year.” | `{"scenarios": [{"method": "VAE", "object_type": "inflation curve", "period": {"start_date": "2022-01-01", "end_date": "2022-12-31"}}]}` | llama3-70b-instruct | Warning: what is the model’s knowledge cutoff? Check the model card in the NVIDIA catalog. In this case, it is March 2023, so “last year” for this model was 2022. |
| “Simulate yield curves and swaption vol surfaces at the peak of the financial crisis.” | `{"scenarios": [{"method": "VAE", "object_type": "yield curve", "period": {"start_date": "2008-09-15", "end_date": "2008-10-15"}}, {"method": "VAE", "object_type": "swaption volatility surface", "period": {"start_date": "2008-09-15", "end_date": "2008-10-15"}}]}` | meta/llama3-70b-instruct | Correctly identifies two types of market objects, as well as the peak of the financial crisis (Lehman Brothers collapsed on September 15, 2008). |

Table 1. Scenario generation Q&A examples obtained with NVIDIA NIM

Market structure analysis using generative models

Financial markets are inherently complex, characterized by noisy, high-dimensional data often observed as multivariate time series. Detecting fleeting patterns that lead to financial gains at scale requires clever modeling and substantial computation.

One way to reduce dimensionality is to exploit the intrinsic structure present in the data: curves, surfaces, and higher-dimensional structures. These structures carry information that can be leveraged to reduce complexity. They can also be viewed as pieces of information to be embedded and analyzed through the latent spaces of generative models. This section reviews examples of how VAEs and DDMs can be used in this context.

VAEs for learning the distribution of market curves

Bond yields and swap, inflation, and foreign exchange rates can be thought of as having one-dimensional term structures, such as zero-coupon, spot, forward, or basis curves. Similarly, option volatilities can be viewed as (hyper-)surfaces in 2D or higher-dimensional spaces. A typical swap curve may have as many as 50 tenors. Instead of studying how the 50 corresponding time series relate to each other, one can consider a single time series of swap curves and use a VAE to learn the distribution of these objects, as detailed in Multiresolution Signal Processing of Financial Market Objects.

The strength of this approach lies in its ability to integrate previously isolated data: the behavior of market objects, traditionally modeled in isolation either by currency (USD, EUR, BRL, for example) or by task (scenario generation, nowcasting, outlier detection, for example), can now be integrated into the training of a single generative model that reflects the interconnected nature of markets.

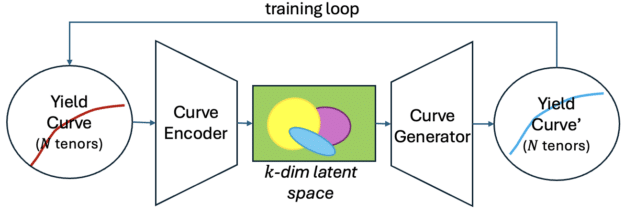

Figure 4 illustrates the training loop of a VAE on market data objects such as yield curves: an encoder compresses the input objects into a latent space with a Gaussian distribution, and a decoder reconstructs curves from points in this space. The latent space is continuous, explicit, and usually lower-dimensional, making it intuitive to navigate. Curves representing different currencies or market regimes cluster in distinct regions, allowing the model to move within or between these clusters to generate new curves, either unconditionally or conditioned on desired market characteristics.

Figure 4. VAE training loop on market data such as yield curves
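A minimal NumPy sketch of the pieces in this training loop (encoder, reparameterized sampling, decoder, and loss) follows. To stay short and self-contained, it uses random linear maps in place of trained neural networks and performs no gradient updates; in practice the weights would be learned with an autodiff framework by minimizing `vae_loss`. The 10-tenor curves and 3D latent space are assumptions chosen to echo the 3D latent spaces shown in this post.

```python
import numpy as np

rng = np.random.default_rng(42)
n_tenors, latent_dim = 10, 3  # assumption: 10-tenor curves and a 3D latent space

# Toy linear encoder/decoder weights; a real VAE uses trained neural networks.
W_mu = rng.normal(0.0, 0.1, (latent_dim, n_tenors))
W_logvar = rng.normal(0.0, 0.1, (latent_dim, n_tenors))
W_dec = rng.normal(0.0, 0.1, (n_tenors, latent_dim))
b_dec = np.zeros(n_tenors)


def encode(curves):
    """Encoder: map curves (batch, n_tenors) to the mean and log-variance of q(z | x)."""
    return curves @ W_mu.T, curves @ W_logvar.T


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps, keeping the sample differentiable in mu and sigma."""
    return mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)


def decode(z):
    """Decoder: map latent points back to curves."""
    return z @ W_dec.T + b_dec


def kl_divergence(mu, logvar):
    """KL(q(z | x) || N(0, I)) per sample, in closed form for diagonal Gaussians."""
    return -0.5 * np.sum(1.0 + logvar - mu**2 - np.exp(logvar), axis=1)


def vae_loss(curves, rng):
    """Common VAE objective: squared reconstruction error (a scaled Gaussian
    log-likelihood) plus the KL term, averaged over the batch."""
    mu, logvar = encode(curves)
    recon = decode(reparameterize(mu, logvar, rng))
    recon_err = np.sum((curves - recon) ** 2, axis=1)
    return float(np.mean(recon_err + kl_divergence(mu, logvar)))


# Toy batch of upward-sloping curves (synthetic, for illustration only)
maturities = np.linspace(0.5, 30.0, n_tenors)
curves = 0.02 + 0.01 * np.log1p(maturities)[None, :] + rng.normal(0, 1e-3, (32, n_tenors))
print(vae_loss(curves, rng))
```

The KL term pulls encodings toward a standard Gaussian, which is what keeps the latent space continuous and easy to sample.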

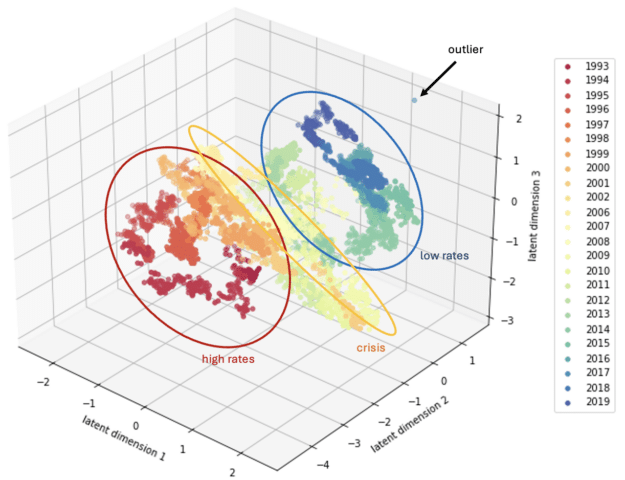

In particular, the generation process can be conditioned on specific historical periods to generate curves with shapes similar to historical ones without being exact replicas. For example, the U.S. Treasury yield curves corresponding to the start of the COVID-19 pandemic are shown in Figure 2 (left) as circles in the 3D latent space of a VAE trained on yield curve data. Because VAE latent spaces are continuous, they naturally lend themselves to defining neighborhoods around points of interest and sampling from these neighborhoods to generate novel yield curve scenarios that are similar (but not identical) to historical ones, shown as triangles in Figure 2 (right).
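Sampling from latent neighborhoods can be sketched as adding small Gaussian perturbations to the latent codes of historical curves and decoding the results. The Gaussian neighborhood, the `radius` parameter, the placeholder latent codes, and `toy_decoder` (a hypothetical stand-in for a trained VAE decoder) are all assumptions for illustration.

```python
import numpy as np


def sample_neighborhood(latent_points, radius, n_per_point, rng):
    """Draw latent samples from isotropic Gaussians centred on historical latent codes.

    latent_points: (n, d) latent codes of historical curves, e.g. encoder means
    radius: standard deviation of the perturbation; smaller values stay closer to history
    """
    n, d = latent_points.shape
    noise = rng.normal(0.0, radius, size=(n, n_per_point, d))
    return (latent_points[:, None, :] + noise).reshape(n * n_per_point, d)


def toy_decoder(z, n_tenors=10):
    """Hypothetical stand-in for a trained VAE decoder: level, slope, and curvature factors."""
    x = np.linspace(0.0, 1.0, n_tenors)
    basis = np.stack([np.ones_like(x), x, x * (1.0 - x)])  # (3, n_tenors)
    return 0.02 + 0.01 * z @ basis


rng = np.random.default_rng(7)
historical_z = rng.normal(0.0, 1.0, size=(5, 3))  # placeholder latent codes for 5 dates
new_z = sample_neighborhood(historical_z, radius=0.1, n_per_point=20, rng=rng)
scenario_curves = toy_decoder(new_z)
print(new_z.shape, scenario_curves.shape)  # (100, 3) (100, 10)
```

The `radius` controls the trade-off between fidelity to history and novelty of the generated scenarios.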

Figure 5 shows a more complete picture of the yield curves used to train the VAE model, including clusters grouped by the level of rates, as well as an outlier caused by the erroneous inclusion of a data point from a day when Treasury markets were closed (Good Friday, 2017). One can easily imagine further applications beyond scenario generation, including non-linear factor decompositions, outlier detection, and many more.

Figure 5. In the 3D latent space of a VAE trained on U.S. Treasury curve data (1993-2019), the financial crisis forms a distinct cluster, highlighting a significant deviation from pre-crisis conditions and a transition into a new post-crisis low-rate environment
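As one example of the outlier-detection use case, latent codes that sit far from the bulk of the data can be flagged by their Mahalanobis distance. This is a sketch assuming roughly Gaussian latent codes; the threshold (about the 99.9% chi-square quantile for 3 degrees of freedom) and the planted anomaly are illustrative. Reconstruction error is another common signal, and a robust covariance estimate would keep extreme points from inflating the statistics.

```python
import numpy as np


def latent_outliers(latent_codes, threshold=16.27):
    """Flag latent codes far from the bulk of the data by squared Mahalanobis distance.

    The default threshold is roughly the 99.9% quantile of a chi-square distribution
    with 3 degrees of freedom (a 3D latent space with approximately Gaussian codes).
    """
    mean = latent_codes.mean(axis=0)
    cov = np.cov(latent_codes, rowvar=False)
    diff = latent_codes - mean
    d2 = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    return np.flatnonzero(d2 > threshold), d2


rng = np.random.default_rng(3)
codes = rng.normal(size=(500, 3))
codes = np.vstack([codes, [[6.0, -6.0, 6.0]]])  # one planted anomaly at index 500
flagged, d2 = latent_outliers(codes)
print(flagged)
```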

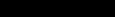

DDMs for learning volatility surfaces

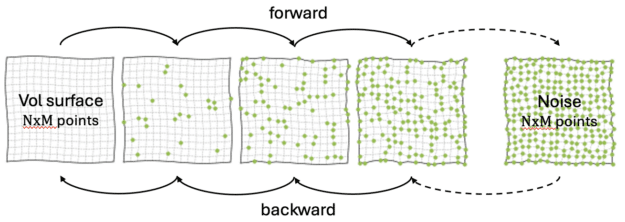

DDMs approach the generative process through the lens of reversible diffusion. As shown in Figure 6, in the forward pass they gradually add noise to the data until the result is indistinguishable from standard Gaussian noise. In the backward pass, the model learns the denoising steps needed to reconstruct the original surface, which lets it generate new samples starting from pure noise. To learn more, see Generative AI Research Spotlight: Demystifying Diffusion-Based Models.

Figure 6. High-level architecture of a DDM trained to learn the distribution of 2D market objects such as volatility surfaces
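The forward (noising) pass has a closed form. With per-step variances $\beta_t$ and $\bar\alpha_t = \prod_{s \le t}(1-\beta_s)$, a noisy version of a surface $x_0$ at step $t$ is $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\varepsilon$ with $\varepsilon \sim \mathcal{N}(0, I)$. The sketch below assumes the linear schedule from the original DDPM paper (1,000 steps, $\beta$ from $10^{-4}$ to $0.02$) and a placeholder 8×8 grid; neither setting is stated in this post.

```python
import numpy as np


def linear_beta_schedule(n_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly over the diffusion."""
    return np.linspace(beta_start, beta_end, n_steps)


def q_sample(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps, eps


betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)  # abar_t = prod_{s <= t} (1 - beta_s)

rng = np.random.default_rng(0)
surface = np.full((8, 8), 0.25)  # placeholder 8x8 vol grid (grid size is an assumption)
for t in (0, 250, 999):
    x_t, _ = q_sample(surface, t, alpha_bar, rng)
    print(t, round(float(alpha_bar[t]), 5), round(float(x_t.std()), 3))
```

By the last step $\bar\alpha_t$ is close to zero, so the sample is essentially standard Gaussian noise.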

This section explores how well a DDM (specifically a DDPM, or denoising diffusion probabilistic model) can learn the distribution of implied volatility surfaces. The example uses a synthetic data set of roughly 20,000 volatility surfaces generated with the SABR (stochastic alpha-beta-rho) stochastic volatility model described in Managing Smile Risk (subject to initial conditions $F(0) = f$ and $\hat{\alpha}(0) = \alpha$):

$$
\begin{aligned}
dF &= \hat{\alpha}\, F^{\beta}\, dW_1, \\
d\hat{\alpha} &= \nu\, \hat{\alpha}\, dW_2, \\
dW_1\, dW_2 &= \rho\, dt.
\end{aligned}
$$
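A synthetic data set of this kind can be produced by evaluating SABR implied volatilities on a fixed grid of strikes and maturities. The sketch below uses the well-known lognormal implied-volatility approximation from Managing Smile Risk, with forward $f$, strike $K$, maturity $T$, and parameters $\alpha, \beta, \rho, \nu$. The parameter ranges, the fixed $\beta = 1$, and the 6×9 grid in `random_sabr_surface` are assumptions for illustration, not the settings behind the 20,000 surfaces used here.

```python
import numpy as np


def sabr_lognormal_vol(f, k, t, alpha, beta, rho, nu):
    """Hagan et al. lognormal (Black) implied-vol approximation for the SABR model."""
    f, k, t = np.asarray(f, float), np.asarray(k, float), np.asarray(t, float)
    one_b = 1.0 - beta
    fk_pow = (f * k) ** (one_b / 2.0)  # (fK)^((1 - beta) / 2)
    log_fk = np.log(f / k)
    denom = fk_pow * (1.0 + one_b**2 / 24.0 * log_fk**2 + one_b**4 / 1920.0 * log_fk**4)
    z = nu / alpha * fk_pow * log_fk
    x = np.log((np.sqrt(1.0 - 2.0 * rho * z + z**2) + z - rho) / (1.0 - rho))
    small = np.abs(z) < 1e-12  # z / x(z) -> 1 at the money; avoid 0 / 0
    ratio = np.where(small, 1.0, z / np.where(small, 1.0, x))
    correction = 1.0 + (one_b**2 / 24.0 * alpha**2 / fk_pow**2
                        + rho * beta * nu * alpha / (4.0 * fk_pow)
                        + (2.0 - 3.0 * rho**2) / 24.0 * nu**2) * t
    return alpha / denom * ratio * correction


def random_sabr_surface(rng, f=0.03):
    """One synthetic surface on a (maturity x moneyness) grid with randomly drawn parameters.

    Parameter ranges and grid are illustrative assumptions.
    """
    moneyness = np.linspace(0.6, 1.4, 9)  # K / F
    maturities = np.array([0.25, 0.5, 1.0, 2.0, 5.0, 10.0])
    k_grid, t_grid = np.meshgrid(f * moneyness, maturities)  # rows: maturities
    params = dict(alpha=rng.uniform(0.15, 0.35), beta=1.0,
                  rho=rng.uniform(-0.6, 0.2), nu=rng.uniform(0.2, 0.8))
    return sabr_lognormal_vol(f, k_grid, t_grid, **params), params


surface, params = random_sabr_surface(np.random.default_rng(0))
print(params)
print(surface.round(3))
```

Repeating the last step with fresh random draws yields as many training surfaces as needed.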

An example input surface is shown in Figure 7.

The goal is to evaluate the ability of such a model to recover the input data distribution, in this case, a SABR distribution. For empirical surfaces implied directly from option market prices, the distribution would be unknown, and a tool that captures the distribution non-parametrically would provide a valuable alternative to sparse parametric models that lack the degrees of freedom to represent the data. Such a model could then be used to generate volatility surface scenarios or to fill in missing regions in plausible ways.

Figure 7. Example of an input SABR surface used for model training

A simplified version of this architecture was adapted for this work. The inputs are 2D grids of implied volatilities corresponding to various pairs of option moneyness and time to maturity. Other types of inputs, such as volatility cubes (moneyness-maturity-underlying) or other variations, could be tackled in a similar fashion.
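Before training, the grids are typically stacked into an image-like tensor. A minimal sketch, assuming a single channel and global min-max scaling to [-1, 1] (a common convention for DDPM inputs that this post does not specify); a volatility cube could, for example, place each underlying in its own channel. `to_ddpm_batch` and `from_ddpm_batch` are hypothetical helper names.

```python
import numpy as np


def to_ddpm_batch(surfaces):
    """Stack 2D vol grids into an (N, 1, H, W) array rescaled to [-1, 1].

    The single channel mirrors a grayscale image; the returned bounds undo the scaling.
    """
    batch = np.stack(surfaces)[:, None, :, :]
    lo, hi = float(batch.min()), float(batch.max())
    return 2.0 * (batch - lo) / (hi - lo) - 1.0, (lo, hi)


def from_ddpm_batch(scaled, bounds):
    """Map model outputs in [-1, 1] back to implied-volatility units, dropping the channel axis."""
    lo, hi = bounds
    return (scaled[:, 0] + 1.0) / 2.0 * (hi - lo) + lo


rng = np.random.default_rng(0)
surfaces = [rng.uniform(0.1, 0.4, size=(6, 9)) for _ in range(4)]  # placeholder grids
batch, bounds = to_ddpm_batch(surfaces)
print(batch.shape)  # (4, 1, 6, 9)
```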

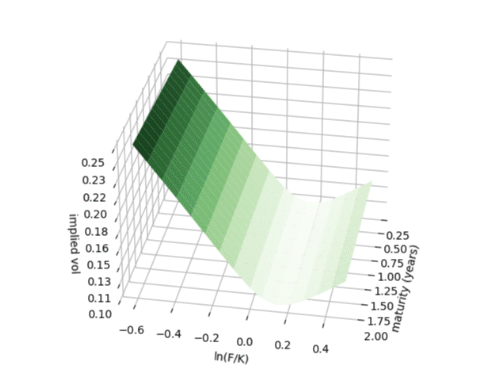

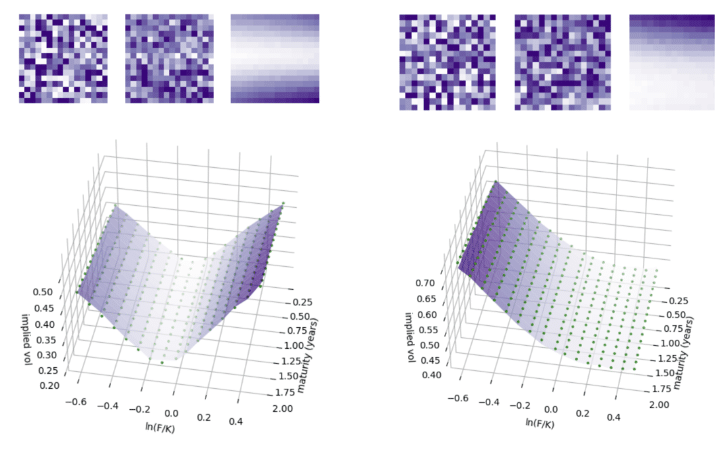

Figure 8 illustrates a few steps in training the DDPM model on volatility surface inputs.

Figure 8. A few training steps: starting with pure noise, the model progressively learns the noise process
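Both halves of a DDPM can be sketched in a few lines: the simplified training objective (predict the injected noise at a random step) and the reverse, ancestral sampling step that turns noise back into a surface. This sketch assumes the linear $\beta$ schedule of the original DDPM paper and the choice $\sigma_t^2 = \beta_t$. `model` stands in for a trained denoising network (such as a U-Net); here it is replaced by a hypothetical placeholder that predicts zero noise, so the sampled output is not a meaningful surface.

```python
import numpy as np


def ddpm_training_loss(x0, model, betas, rng):
    """Simplified DDPM objective: MSE between the injected noise and the model's prediction."""
    alpha_bar = np.cumprod(1.0 - betas)
    t = int(rng.integers(0, len(betas)))
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return float(np.mean((eps - model(x_t, t)) ** 2))


def p_sample_step(x_t, t, eps_pred, betas, rng):
    """One ancestral sampling step x_t -> x_{t-1}, given the predicted noise eps_pred."""
    alphas = 1.0 - betas
    alpha_bar = np.cumprod(alphas)
    mean = (x_t - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_pred) / np.sqrt(alphas[t])
    if t == 0:
        return mean  # no fresh noise on the final step
    return mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)  # sigma_t^2 = beta_t


def sample(model, shape, betas, rng):
    """Generate a surface by running the reverse chain from pure Gaussian noise."""
    x = rng.standard_normal(shape)
    for t in reversed(range(len(betas))):
        x = p_sample_step(x, t, model(x, t), betas, rng)
    return x


def zero_model(x, t):
    """Placeholder for a trained noise-prediction network."""
    return np.zeros_like(x)


betas = np.linspace(1e-4, 0.02, 1000)
rng = np.random.default_rng(0)
print(ddpm_training_loss(np.full((16, 8, 8), 0.25), zero_model, betas, rng))
print(sample(zero_model, (8, 8), betas, rng).shape)  # (8, 8)
```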

Figure 9 shows two synthetically generated surfaces that were subsequently fitted with SABR models to check that their shapes are SABR-like. Green points represent the SABR surfaces fitted to the generated ones, confirming that the DDPM model has learned SABR-like shapes.

Figure 9. Transition from noise to a generated sample, showing an intermediate noisy surface (top) and newly generated volatility surfaces (in purple, bottom)
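The SABR check can be sketched as a calibration: fit $(\alpha, \rho, \nu)$ to a generated smile and inspect the fitting error. To stay dependency-free, this sketch fixes $\beta = 1$ (which simplifies the Hagan approximation), fits a single maturity, and uses a brute-force grid search; a local optimizer such as `scipy.optimize.least_squares` would normally refine the result. The “generated” smile below is a synthetic stand-in, not DDPM output.

```python
import itertools

import numpy as np


def sabr_vol_beta1(f, k, t, alpha, rho, nu):
    """Hagan et al. SABR lognormal vol approximation, specialised to beta = 1."""
    z = nu / alpha * np.log(f / k)
    x = np.log((np.sqrt(1.0 - 2.0 * rho * z + z**2) + z - rho) / (1.0 - rho))
    small = np.abs(z) < 1e-12  # z / x(z) -> 1 at the money
    ratio = np.where(small, 1.0, z / np.where(small, 1.0, x))
    return alpha * ratio * (1.0 + (rho * nu * alpha / 4.0 + (2.0 - 3.0 * rho**2) * nu**2 / 24.0) * t)


def fit_sabr_grid(f, strikes, t, vols, alphas, rhos, nus):
    """Calibrate (alpha, rho, nu) to one smile by brute-force grid search on RMSE."""
    best, best_err = None, np.inf
    for alpha, rho, nu in itertools.product(alphas, rhos, nus):
        err = np.sqrt(np.mean((sabr_vol_beta1(f, strikes, t, alpha, rho, nu) - vols) ** 2))
        if err < best_err:
            best, best_err = (alpha, rho, nu), err
    return best, best_err


f, t = 0.03, 1.0
strikes = f * np.linspace(0.6, 1.4, 9)
# Stand-in for a generated smile: a SABR smile with a little noise added
rng = np.random.default_rng(0)
generated = sabr_vol_beta1(f, strikes, t, 0.2, -0.3, 0.5) + rng.normal(0, 1e-4, strikes.shape)
params, rmse = fit_sabr_grid(f, strikes, t, generated,
                             alphas=np.linspace(0.1, 0.3, 21),
                             rhos=np.linspace(-0.5, 0.5, 11),
                             nus=np.linspace(0.1, 0.9, 9))
print(params, rmse)
```

A small residual suggests the generated smile lies close to the SABR family; a large one flags shapes the parametric model cannot reproduce.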

Sample implementation

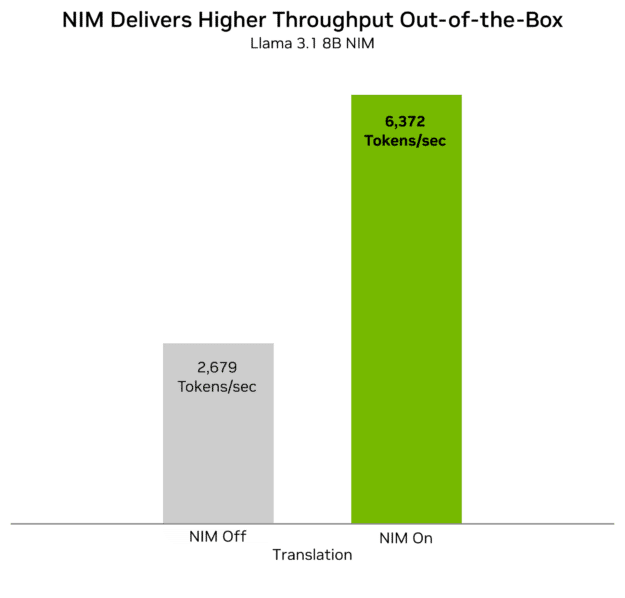

This section presents an example of using NVIDIA-hosted NIM endpoints with the Llama 3.1 70B Instruct LLM to build the LLMQueryInterpreter component of the reference architecture in Figure 1. Many other NVIDIA and open-source LLMs are available with NIM, including Nemotron, Mixtral, Gemma, and many more. Accessing them through NIM ensures that they are optimized for inference on NVIDIA-accelerated infrastructure and offers a quick and easy way to compare responses from multiple models.

```python
import os

import openai
from langchain_nvidia_ai_endpoints import ChatNVIDIA

# NVIDIA API configuration
NVIDIA_API_KEY = os.environ.get("NVIDIA_API_KEY")  # click "Get API Key" at https://www.nvidia.com/en-us/ai/
if not NVIDIA_API_KEY:
    raise ValueError("NVIDIA_API_KEY environment variable is not set")

NVIDIA_BASE_URL = "https://integrate.api.nvidia.com/v1"
# OpenAI-compatible client for the same endpoint (not used by LLMQueryInterpreter below)
client = openai.OpenAI(base_url=NVIDIA_BASE_URL, api_key=NVIDIA_API_KEY)


class LLMQueryInterpreter:
    """NVIDIA NIM-powered class that processes scenario requests from the user."""

    def __init__(self, llm_name="meta/llama-3.1-70b-instruct", temperature=0):
        # ChatNVIDIA picks up NVIDIA_API_KEY from the environment
        self._llm = ChatNVIDIA(model=llm_name, temperature=temperature)

        # define output JSON format
        self._scenario_template = """
{"scenarios": [
    {
        "method": <generative method name, e.g., "VAE">,
        "object_type": <object type, e.g., "yield curve">,
        "period": {
            "start_date": <start date of event>,
            "end_date": <end date of event>
        }
    },
    {
        "method": <generative method name, e.g., "DDPM">,
        "object_type": <object type, e.g., "volatility surface">,
        "period": {
            "start_date": <start date of event>,
            "end_date": <end date of event>
        }
    },
    // Additional scenarios, as needed
]}
"""

        # instructions for the LLM
        self._prompt_template = """
Your task is to generate a single, high-quality response in JSON format.
Format the output according to the following template: {scenario_template}.
Provide a refined, accurate, and comprehensive answer to the instruction.
Each query has a structure of this kind:
"Use <method> to generate <market_object_type> similar to <period>".
Valid methods are "VAE" or "DDPM"; use "VAE" by default, if no method is specified.
If a different method is requested, return "invalid" as the method type.
Answer the following query without any additional explanations, return only a JSON output:
{query}
"""

    def process_query(self, query):
        # e.g., query = "Using a VAE model, output yield curves similar to those in the second half of 2020"
        llm_request = self._prompt_template.format(
            query=query, scenario_template=self._scenario_template
        )
        llm_answer = self._llm.invoke(llm_request)
        return llm_answer.content


# Example usage
query = "Using a VAE model, output yield curves similar to the Flash Crash"
llm = LLMQueryInterpreter()
print(llm.process_query(query))
```

Output:

```json
{
  "scenarios": [
    {
      "method": "VAE",
      "object_type": "yield curve",
      "period": {
        "start_date": "2010-05-06",
        "end_date": "2010-05-06"
      }
    }
  ]
}
```
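Because the interpreter returns free text, it is prudent to validate it before routing it to any model. A minimal sketch, assuming the top-level `scenarios` key and ISO-formatted dates; `parse_scenarios` and `SUPPORTED_METHODS` are hypothetical names. It rejects the “invalid” marker that the prompt asks the LLM to emit for unsupported methods and also catches reversed date ranges.

```python
import json
from datetime import date

SUPPORTED_METHODS = {"VAE", "DDPM"}


def parse_scenarios(llm_output):
    """Extract and sanity-check the scenario list from the interpreter's raw text output."""
    start, end = llm_output.find("{"), llm_output.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the LLM output")
    # Slicing from the first "{" to the last "}" tolerates stray text around the JSON
    scenarios = json.loads(llm_output[start:end + 1])["scenarios"]
    for s in scenarios:
        if s["method"] not in SUPPORTED_METHODS:  # also rejects the prompt's "invalid" marker
            raise ValueError(f"unsupported method: {s['method']!r}")
        first = date.fromisoformat(s["period"]["start_date"])
        last = date.fromisoformat(s["period"]["end_date"])
        if first > last:
            raise ValueError(f"start_date {first} is after end_date {last}")
    return scenarios


raw = (
    'Here is the JSON: {"scenarios": [{"method": "VAE", "object_type": "yield curve", '
    '"period": {"start_date": "2010-05-06", "end_date": "2010-05-06"}}]}'
)
print(parse_scenarios(raw)[0]["object_type"])  # yield curve
```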

Conclusion

It’s exciting to imagine a future where quants, traders, and investment professionals increasingly collaborate with AI tools to model and explore financial markets. Integrating these advanced tools enhances financial modeling and market exploration, promising to expand the capabilities and insights of market participants. They can be combined in innovative ways and served with ease using NVIDIA NIM.