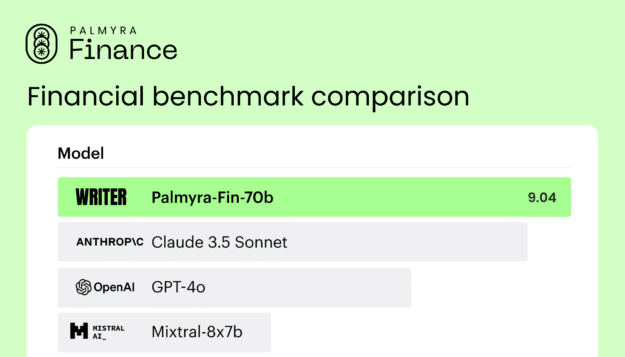

Writer Releases Domain-Specific LLMs for Healthcare and Finance

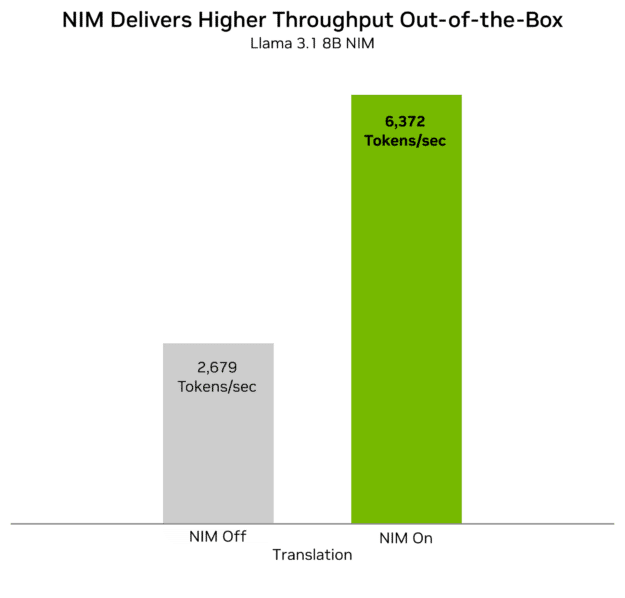

Writer has released two new domain-specific AI models, Palmyra-Med 70B and Palmyra-Fin 70B, expanding the capabilities of NVIDIA NIM. These models bring unparalleled accuracy to medical and financial generative AI...
